The Ethics of AI in Mental Health Data Collection

The Role of Human Oversight and Ethical Guidelines

The Importance of Human Judgment in AI Systems

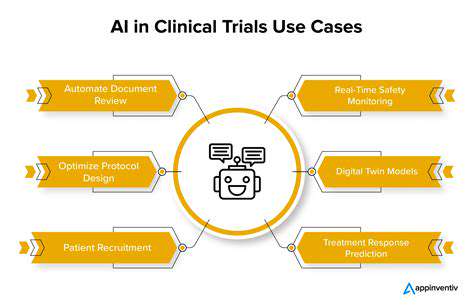

AI systems are powerful, but they are not infallible. They are trained on vast datasets, yet their grasp of context and nuance can be limited. Human oversight is crucial for ensuring that these systems operate ethically and responsibly. In practice, this means carefully analyzing the data used to train the system, identifying potential biases, and building mechanisms for human intervention when necessary. Without such oversight, AI systems risk perpetuating harmful stereotypes or making decisions with unintended negative consequences.

A critical aspect of human oversight is the ability to interpret and assess an AI system's output. AI can provide valuable insights and predictions, but human analysts still need to evaluate those results in the context of real-world situations. This evaluation can reveal where the system is faltering, where its predictions are inaccurate, and where human judgment and intervention are required to achieve the desired outcome.
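One common way to build in a mechanism for human intervention is to route uncertain or high-stakes predictions to a reviewer instead of acting on them automatically. The sketch below is only an illustration under stated assumptions: the `Prediction` type, the `0.85` threshold, and the `elevated_risk` label are hypothetical and do not come from any particular system.

```python
from dataclasses import dataclass

# Hypothetical values chosen for illustration only.
REVIEW_THRESHOLD = 0.85                   # below this confidence, a human decides
ALWAYS_REVIEW_LABELS = {"elevated_risk"}  # high-stakes labels always get a human


@dataclass
class Prediction:
    case_id: str
    label: str
    confidence: float  # model's reported probability, in [0, 1]


def route(prediction: Prediction, threshold: float = REVIEW_THRESHOLD) -> str:
    """Return "human_review" for low-confidence or high-stakes output, else "auto"."""
    if prediction.label in ALWAYS_REVIEW_LABELS:
        return "human_review"
    if prediction.confidence < threshold:
        return "human_review"
    return "auto"


predictions = [
    Prediction("case-1", "low_risk", 0.95),
    Prediction("case-2", "low_risk", 0.60),
    Prediction("case-3", "elevated_risk", 0.99),
]
review_queue = [p.case_id for p in predictions if route(p) == "human_review"]
print(review_queue)  # ['case-2', 'case-3']
```

The threshold and the always-review set are policy choices rather than technical ones, so they would normally be set and revisited by the people accountable for the outcomes.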

Ethical Considerations in AI Development and Deployment

The development and deployment of AI systems raise many ethical concerns, ranging from data privacy and security to accountability and fairness. Developing and deploying these systems responsibly requires careful consideration of their potential impact on individuals and on society as a whole. This means engaging in ongoing dialogue with stakeholders and incorporating their concerns and perspectives into the design and implementation process.

Another crucial element of ethical AI development is the prevention of bias. AI systems learn from the data they are trained on, and if that data reflects existing societal biases, the system may perpetuate and even amplify them. Addressing this requires careful data curation and rigorous testing, followed by ongoing monitoring and evaluation to identify and mitigate biases that emerge over time. Clear guidelines and regulations are also needed to ensure that AI systems are used in ways that respect human rights and promote social justice.
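As one example of what a testing procedure for bias might look like, the sketch below compares how often a system produces a positive prediction for each group and reports the largest gap between groups (often called a demographic parity gap). It is a minimal illustration, not a complete fairness audit: the group names and records are made up, and other fairness criteria may be more appropriate depending on the application.

```python
from collections import defaultdict


def positive_rate_by_group(records):
    """Compute the share of positive predictions per group.

    records: iterable of (group, predicted_positive) pairs.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, predicted_positive in records:
        totals[group] += 1
        if predicted_positive:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(rates):
    """Difference between the highest and lowest per-group positive rate."""
    return max(rates.values()) - min(rates.values())


# Made-up records for illustration: (group, model predicted positive?)
records = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]
rates = positive_rate_by_group(records)
print(rates)              # {'A': 0.75, 'B': 0.25}
print(parity_gap(rates))  # 0.5
```

A large gap does not prove the system is unfair on its own, but it is a signal that the data and the model deserve closer human examination.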

Mitigating Risks and Ensuring Transparency

Effective safeguards are essential for mitigating the risks associated with AI systems, and transparency is one of the most important. When the decision-making processes of an AI system are opaque, it is difficult to understand how the system arrives at its conclusions. This lack of transparency undermines trust and accountability, makes people reluctant to adopt AI systems, and makes errors harder to identify and correct.

Developing clear and accessible methods for explaining AI decisions is therefore essential. This includes explaining why a particular outcome was reached and identifying the factors that contributed to the decision. Such explanations foster trust and understanding, enabling stakeholders to evaluate the decisions made by AI systems and to spot potential biases or errors.
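For a simple linear scoring model, identifying the contributing factors can be as direct as listing each feature's weight multiplied by its value. The sketch below assumes such a model; the feature names and weights are hypothetical, and more complex models generally need dedicated explanation techniques because their outputs do not decompose this cleanly.

```python
def explain_linear(weights, features, bias=0.0):
    """Return the score and each feature's contribution, largest magnitude first.

    weights, features: dicts keyed by feature name.
    """
    contributions = {
        name: weights[name] * value for name, value in features.items()
    }
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


# Hypothetical weights and inputs, for illustration only.
weights = {"sleep_hours": -0.5, "missed_sessions": 1.0}
features = {"sleep_hours": 4.0, "missed_sessions": 3.0}

score, ranked = explain_linear(weights, features)
print(score)   # 1.0
for name, contribution in ranked:
    print(f"{name}: {contribution:+.2f}")
# missed_sessions: +3.00
# sleep_hours: -2.00
```

Presenting the ranked contributions alongside the decision gives a reviewer something concrete to question, which is the point of an explanation.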

Robust auditing and oversight mechanisms are also critical for keeping AI systems aligned with ethical principles and societal values. Clear guidelines and procedures for auditing make it possible to identify risks and vulnerabilities and to take corrective action promptly. Regular monitoring and evaluation of a system's performance helps to surface emerging issues before they cause harm.
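One simple monitoring signal, sketched below, is the rate at which human reviewers overrule the system: a rising override rate suggests the model's outputs are drifting away from human judgment. The log format, field names, and the `0.2` alert threshold are assumptions made for illustration, not an established standard.

```python
def override_rate(audit_log):
    """Share of human-reviewed decisions where the reviewer disagreed with the model."""
    reviewed = [entry for entry in audit_log if entry["human_decision"] is not None]
    if not reviewed:
        return 0.0
    overridden = sum(
        1 for entry in reviewed
        if entry["human_decision"] != entry["model_decision"]
    )
    return overridden / len(reviewed)


def needs_attention(audit_log, max_override_rate=0.2):
    """Flag the system for audit when reviewers overrule it too often (hypothetical threshold)."""
    return override_rate(audit_log) > max_override_rate


# Made-up audit entries; human_decision is None when no review took place.
audit_log = [
    {"case_id": "case-1", "model_decision": "flag", "human_decision": "flag"},
    {"case_id": "case-2", "model_decision": "flag", "human_decision": "clear"},
    {"case_id": "case-3", "model_decision": "clear", "human_decision": "clear"},
    {"case_id": "case-4", "model_decision": "clear", "human_decision": None},
]
print(needs_attention(audit_log))  # True (1 of 3 reviewed decisions was overridden)
```

A flag like this only tells auditors where to look; deciding what corrective action to take remains a human responsibility.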
