AI-Assisted Diagnosis: Speeding Up Mental Health Care

Ethical Considerations and Future Implications

Data Privacy and Security

Protecting patient data is paramount in AI-assisted diagnosis. Robust security measures, including encryption, access controls, and rigorous auditing procedures, are crucial to prevent unauthorized access, breaches, and misuse of sensitive medical information. Healthcare providers must also establish clear, transparent data usage policies and communicate them to patients. This lets patients give informed consent and understand how their data is used for diagnostic purposes. Failing to address these concerns could expose healthcare institutions to significant legal and reputational risk and erode public trust.

Bias in Algorithms

AI algorithms are trained on large datasets. If these datasets reflect existing societal biases, the algorithms can perpetuate and even amplify those biases. For example, if a dataset consists predominantly of data from one demographic group, the AI system may perform less accurately for other groups. The training data must therefore be evaluated carefully for potential biases, and strategies must be developed to mitigate them. This requires diverse and representative datasets, ongoing monitoring, and continuous evaluation of the algorithm's performance across different patient populations.

Addressing algorithmic bias is not just a technical challenge; it's also an ethical one, demanding careful consideration of the potential for discrimination and unfair outcomes.
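As a rough illustration of the per-population evaluation described above, the following Python sketch computes accuracy and sensitivity (the share of true cases the model catches) separately for each patient group. It then flags any group that trails the best-performing group by more than a set margin. The record format, the function names `per_group_metrics` and `flag_gaps`, the 0.1 gap threshold, and the example data are all hypothetical assumptions for illustration. They are not taken from any particular diagnostic system.

```python
from collections import defaultdict

# Hypothetical record format: (group, y_true, y_pred), where labels are 0 or 1
# (1 = condition present). Example data is invented for illustration only.
example_records = [
    ("A", 1, 1), ("A", 1, 1), ("A", 0, 0), ("A", 1, 0),
    ("B", 1, 0), ("B", 1, 1), ("B", 0, 0), ("B", 1, 0),
]


def per_group_metrics(records):
    """Return accuracy and sensitivity (true-positive rate) for each group."""
    counts = defaultdict(lambda: {"n": 0, "correct": 0, "tp": 0, "pos": 0})
    for group, y_true, y_pred in records:
        c = counts[group]
        c["n"] += 1
        c["correct"] += int(y_true == y_pred)
        if y_true == 1:
            c["pos"] += 1                 # actual positive case
            c["tp"] += int(y_pred == 1)   # positive case the model caught
    results = {}
    for group, c in counts.items():
        results[group] = {
            "n": c["n"],
            "accuracy": c["correct"] / c["n"],
            # Sensitivity is undefined when a group has no positive cases.
            "sensitivity": c["tp"] / c["pos"] if c["pos"] else None,
        }
    return results


def flag_gaps(results, metric="sensitivity", max_gap=0.1):
    """Return groups whose metric trails the best group by more than max_gap."""
    values = {g: r[metric] for g, r in results.items() if r[metric] is not None}
    if not values:
        return []
    best = max(values.values())
    return sorted(g for g, v in values.items() if best - v > max_gap)


if __name__ == "__main__":
    metrics = per_group_metrics(example_records)
    for group, m in sorted(metrics.items()):
        print(group, m)
    print("Groups needing review:", flag_gaps(metrics))
```

Sensitivity is reported alongside accuracy because, in a diagnostic setting, a model can look accurate overall while missing a disproportionate share of true cases in one group. In practice, such checks would be rerun on fresh data as part of ongoing monitoring, not just once at training time.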

Transparency and Explainability

Understanding how an AI system arrives at a diagnosis is crucial for building trust and ensuring appropriate clinical oversight. With opaque "black box" algorithms, it can be difficult to identify errors or to understand the reasoning behind a particular diagnosis. Transparent and explainable AI (XAI) models let clinicians understand the rationale behind AI-generated recommendations and integrate them effectively into the clinical workflow. This transparency fosters greater confidence in the AI's output and supports more informed clinical decision-making.

Clinical Oversight and Validation

AI-assisted diagnosis should not replace human clinicians but rather augment their expertise. A robust system of clinical oversight and validation is critical to ensure that AI recommendations are accurate and reliable. Clinicians need to be empowered to review AI-generated diagnoses, understand the limitations of the system, and make final decisions based on a holistic patient assessment. This collaborative approach balances the speed and efficiency of AI with the critical judgment and experience of human professionals.

A strong focus on validation and verification is essential to build trust in the accuracy and reliability of AI-driven diagnostic tools.

Potential Impact on Healthcare Professionals

The integration of AI into healthcare is likely to change the role of healthcare professionals significantly. Clinicians may need to adapt their skills to work alongside AI tools, focusing on tasks that require critical thinking, complex judgment, and patient interaction. This shift calls for investment in education and training programs that equip healthcare professionals with the competencies needed to use AI-powered diagnostic tools effectively. It is also important to consider the potential for job displacement and to develop strategies that support career transitions and retraining.

Accessibility and Equity

Ensuring equitable access to AI-assisted diagnostic tools is critical. The cost of implementing and maintaining these systems, as well as the digital literacy needed to utilize them, can create disparities in access. Strategies to address these disparities need to be developed, including affordable access options and digital literacy programs for underserved populations. This will help ensure that the benefits of AI-assisted diagnosis are broadly shared and contribute to a more equitable healthcare system.

Future Implications for Healthcare Systems

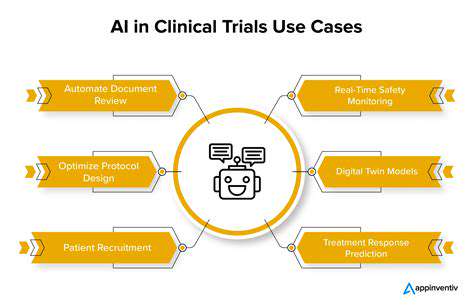

The integration of AI into healthcare could transform diagnostic processes, but its long-term implications for healthcare systems are multifaceted and require careful consideration. Potential benefits include streamlined workflows, better resource allocation, and improved patient outcomes. However, challenges such as system integration, workforce adaptation, and the management of rapidly evolving technology must be addressed proactively. A thoughtful, strategic approach is needed to navigate these complexities and use AI to build a more efficient and effective healthcare system.
