The Ethical AI: Responsible Innovation in Mental Wellness

Bias Mitigation and Fairness in AI Systems

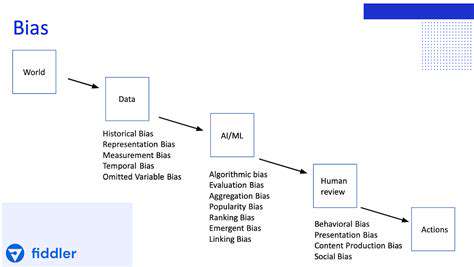

Understanding Bias in AI Systems

While artificial intelligence offers tremendous capabilities, these systems often reflect the biases embedded in their training data. Facial recognition software, for example, has historically been less accurate for some ethnic groups than for others, which shows how technology can unintentionally perpetuate social inequities. The first step toward equitable AI is acknowledging that these systemic biases exist within algorithms.

Architectural decisions made during development can introduce further bias. The criteria used to select model features, or the way variables are weighted, can systematically advantage certain populations. Bias mitigation therefore has to address both the data and the design of the model.

Data Collection and Preprocessing

Effective bias reduction begins with conscientious data practices. Training datasets must reflect the full range of populations the system will serve, so researchers should actively collect data from marginalized communities that are often underrepresented in technical datasets. For instance, medical AI trained primarily on data from male patients frequently performs worse for female patients.
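One simple way to act on this is to compare each group's share of the training data with its share of the population the system is meant to serve. The sketch below assumes you already have a group label per record and reference population shares; the function name, group labels, and the 5-percentage-point tolerance are hypothetical choices for illustration.

```python
from collections import Counter


def representation_gaps(group_labels, reference_shares, tolerance=0.05):
    """Groups whose share of the dataset falls short of their share of the
    served population by more than `tolerance` (absolute proportion)."""
    counts = Counter(group_labels)
    total = len(group_labels)
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts[group] / total  # Counter returns 0 for unseen groups
        if expected - observed > tolerance:
            gaps[group] = round(expected - observed, 3)
    return gaps


# Hypothetical training set for a medical model: 80 male, 20 female patients.
labels = ["male"] * 80 + ["female"] * 20
print(representation_gaps(labels, {"male": 0.5, "female": 0.5}))
# → {'female': 0.3}
```

A check like this only flags under-representation along dimensions you already record; it cannot reveal groups that were never labeled.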

Preprocessing techniques such as SMOTE (Synthetic Minority Over-sampling Technique) can help balance datasets by generating synthetic examples for underrepresented classes or groups. However, technical fixes alone cannot resolve every representation problem; human judgment remains essential for identifying subtle biases.
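The core idea behind SMOTE-style oversampling is interpolation: a synthetic row is placed at a random point between an existing minority row and one of its nearest minority neighbours. The sketch below is a simplified, dependency-free illustration of that idea; the function name and sample data are hypothetical, and in practice a maintained implementation such as the `SMOTE` class in the imbalanced-learn library would usually be preferable.

```python
import random


def smote_like_oversample(minority, n_new, k=3, seed=0):
    """Generate n_new synthetic rows for an underrepresented group.

    Each synthetic row lies on the segment between a randomly chosen minority
    row and one of its k nearest minority neighbours (Euclidean distance).
    Requires at least two minority rows.
    """
    if len(minority) < 2:
        raise ValueError("need at least two minority rows to interpolate")
    rng = random.Random(seed)

    def dist2(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        base = minority[i]
        others = [row for j, row in enumerate(minority) if j != i]
        neighbours = sorted(others, key=lambda row: dist2(base, row))[:k]
        neighbour = rng.choice(neighbours)
        gap = rng.random()  # random position in [0, 1) along the segment
        synthetic.append([b + gap * (n - b) for b, n in zip(base, neighbour)])
    return synthetic


# Hypothetical two-feature rows for an underrepresented group.
minority = [[1.0, 2.0], [1.5, 1.8], [2.0, 2.2], [1.2, 2.5]]
new_rows = smote_like_oversample(minority, n_new=5)
```

Because every synthetic row is an interpolation of real rows, oversampling cannot add information the minority data never contained, which is one reason human review of representation remains necessary.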

Algorithmic Fairness Metrics

Quantitative fairness measures provide essential safeguards against discriminatory AI outcomes. These metrics evaluate whether different demographic groups receive equitable treatment from an algorithm. The right metric depends heavily on context: statistical parity (equal rates of positive predictions across groups) might suit hiring algorithms, while equalized odds (equal true positive and false positive rates across groups) could better serve medical diagnostics.

Fairness assessments should examine multiple dimensions including false positive/negative rates across groups and predictive accuracy disparities. Regular metric evaluation helps maintain fairness as data distributions evolve over time.
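The difference between these metrics is easiest to see on a small example. The sketch below computes per-group positive-prediction, true positive, and false positive rates for binary labels, then reports the largest between-group gap for each. Function names and data are hypothetical; libraries such as Fairlearn provide maintained versions of these metrics.

```python
def group_rates(y_true, y_pred, groups):
    """Per-group rate of positive predictions, true positive rate and false positive rate.

    Labels and predictions are 0/1. Assumes every group contains at least one
    actual positive and one actual negative.
    """
    rates = {}
    for g in sorted(set(groups)):
        idx = [i for i, gi in enumerate(groups) if gi == g]
        pos = [i for i in idx if y_true[i] == 1]
        neg = [i for i in idx if y_true[i] == 0]
        rates[g] = {
            "positive_rate": sum(y_pred[i] for i in idx) / len(idx),
            "tpr": sum(y_pred[i] for i in pos) / len(pos),
            "fpr": sum(y_pred[i] for i in neg) / len(neg),
        }
    return rates


def fairness_gaps(y_true, y_pred, groups):
    """Largest between-group difference for each rate.

    statistical_parity_diff compares positive-prediction rates; tpr_gap and
    fpr_gap together describe how far the model is from equalized odds.
    """
    rates = group_rates(y_true, y_pred, groups)

    def spread(key):
        values = [r[key] for r in rates.values()]
        return max(values) - min(values)

    return {
        "statistical_parity_diff": spread("positive_rate"),
        "tpr_gap": spread("tpr"),
        "fpr_gap": spread("fpr"),
    }


# Hypothetical labels: both groups receive positive predictions at the same
# rate, but the model's errors are distributed very differently.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 1, 0]
groups = ["A"] * 4 + ["B"] * 4
print(fairness_gaps(y_true, y_pred, groups))
# → {'statistical_parity_diff': 0.0, 'tpr_gap': 0.5, 'fpr_gap': 0.5}
```

Here the model satisfies statistical parity exactly while missing half of group B's true cases and falsely flagging half of its negatives, which is why examining several metrics matters.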

Model Explainability and Interpretability

Transparent AI decision-making makes bias easier to detect and correct. Techniques such as LIME (Local Interpretable Model-Agnostic Explanations) reveal which factors drove an individual prediction, allowing developers to spot problematic patterns. If a loan approval model gives disproportionate weight to zip codes, which can act as a proxy for race, a proper explanation method makes this visible.

Continuous Monitoring and Evaluation

Algorithmic fairness requires ongoing vigilance rather than one-time fixes. Organizations should establish permanent fairness review boards that regularly audit AI systems using updated evaluation frameworks. These teams should include domain experts who can recognize emerging bias patterns that quantitative metrics might miss.

Periodic bias testing becomes especially crucial when models are deployed in new regions or adapted for different use cases, as previously minor biases can become significant in altered contexts.
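One practical trigger for re-running bias tests is a measurable shift in the input population. The sketch below uses the population stability index (PSI), a common drift measure that the text does not itself prescribe, to compare a feature's distribution in the original deployment with a new region. The bin edges and sample data are hypothetical. A commonly cited rule of thumb treats PSI above 0.25 as a major shift, but that threshold is a heuristic, not a standard.

```python
import math


def population_stability_index(baseline, current, edges):
    """PSI between two samples of one feature, binned on shared `edges`.

    Values below edges[1] fall in the first bin and values at or above
    edges[-2] in the last, so out-of-range values are clipped rather than lost.
    """
    n_bins = len(edges) - 1

    def shares(values):
        counts = [0] * n_bins
        for v in values:
            i = 0
            while i < n_bins - 1 and v >= edges[i + 1]:
                i += 1
            counts[i] += 1
        # A small floor avoids log(0) when a bin is empty in one sample.
        return [max(c / len(values), 1e-6) for c in counts]

    b, c = shares(baseline), shares(current)
    return sum((ci - bi) * math.log(ci / bi) for bi, ci in zip(b, c))


edges = [0, 25, 50, 75, 100]             # hypothetical bins for a model input
original_region = [10, 30, 60, 90] * 25  # evenly spread across the four bins
new_region = [10] * 70 + [30, 60, 90] * 10
psi = population_stability_index(original_region, new_region, edges)
```

Here `psi` comes out at roughly 0.88, well above the heuristic threshold, so the fairness metrics would be worth recomputing on data from the new region before relying on the model there.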

Data Privacy and Security in Mental Health AI

Data Minimization and Purpose Limitation

The sensitive nature of mental health data demands strict collection protocols. Under the principle of data minimization, systems should gather only essential information: a basic mood-tracking app, for example, might collect depression screening scores rather than complete medical histories. Each data field should have a documented therapeutic justification to prevent function creep.
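A documented allowlist is one way to enforce this in code: every permitted field carries its justification, and anything else is dropped before storage. The field names and justifications below are hypothetical and shown only as an illustration; a real system would also need to handle nested data and log what was discarded.

```python
# Hypothetical allowlist for a basic mood-tracking app. Each permitted field
# maps to its documented therapeutic justification.
ALLOWED_FIELDS = {
    "user_id": "Links entries to the user's own account",
    "phq9_score": "Depression screening score used to track mood over time",
    "daily_mood": "Self-reported mood rating, the app's core feature",
}

# Refuse to run if any allowed field lacks a written justification.
if not all(reason.strip() for reason in ALLOWED_FIELDS.values()):
    raise ValueError("every allowed field needs a documented justification")


def minimize(record, allowed=ALLOWED_FIELDS):
    """Return (kept, dropped): kept holds only allowlisted fields."""
    kept = {k: v for k, v in record.items() if k in allowed}
    dropped = sorted(k for k in record if k not in allowed)
    return kept, dropped


incoming = {"user_id": "u-17", "phq9_score": 8, "daily_mood": 3,
            "full_medical_history": "long free-text record", "employer": "ExampleCo"}
kept, dropped = minimize(incoming)
# kept    → {'user_id': 'u-17', 'phq9_score': 8, 'daily_mood': 3}
# dropped → ['employer', 'full_medical_history']
```

Because new fields are rejected by default, adding one requires writing its justification first, which makes function creep visible in code review.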

Transparency and Explainability

Mental health AI must demystify its operations for both clinicians and patients. Detailed documentation should explain how algorithms process sensitive information and what factors influence recommendations. When an AI suggests adjusting medication, it should provide the clinical indicators that prompted this suggestion, enabling professional verification.

Bias Mitigation and Fairness

Cultural competence in mental health AI requires diverse training datasets that account for how symptom presentation varies across demographics. Depression, for example, can manifest differently in collectivist and individualist cultures, and algorithms must recognize these nuances. Regular bias audits should evaluate whether diagnostic accuracy remains consistent across population subgroups.
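A subgroup audit can be as simple as computing accuracy per group and flagging any group that trails the best-served group by more than an agreed margin. In the sketch below, the function name, group labels, and the 5-point margin are hypothetical. In practice, accuracy alone can hide differences in false negatives, so an audit would usually also report per-group error rates.

```python
from collections import defaultdict


def subgroup_accuracy_audit(y_true, y_pred, groups, max_gap=0.05):
    """Per-group accuracy, plus groups trailing the best-served group by more than max_gap."""
    correct, total = defaultdict(int), defaultdict(int)
    for t, p, g in zip(y_true, y_pred, groups):
        total[g] += 1
        correct[g] += int(t == p)
    accuracy = {g: correct[g] / total[g] for g in total}
    best = max(accuracy.values())
    flagged = sorted(g for g, acc in accuracy.items() if best - acc > max_gap)
    return accuracy, flagged


# Hypothetical screening outcomes (1 = condition present) for two subgroups.
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 0, 1, 0, 0, 0, 1, 1]
groups = ["group_a"] * 4 + ["group_b"] * 4
accuracy, flagged = subgroup_accuracy_audit(y_true, y_pred, groups)
# accuracy → {'group_a': 1.0, 'group_b': 0.5}; flagged → ['group_b']
```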

Data Security and Confidentiality

Beyond standard encryption, mental health AI systems need specialized protections such as differential privacy, which adds calibrated noise to aggregate results so that released statistics reveal very little about any single individual. Breach response plans must account for the particular risks of exposing mental health data, including provisions for psychological support for affected patients if sensitive information is compromised.
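The classic building block is the Laplace mechanism. A count changes by at most 1 when one person is added or removed, so adding Laplace noise with scale 1/ε makes the released count ε-differentially private. The sketch below illustrates this with Python's `random` module. That module is not a secure randomness source, and naive floating-point noise has known weaknesses, so production systems should use a vetted differential privacy library. The scores and threshold are hypothetical.

```python
import random


def laplace_noise(scale, rng):
    """Laplace(0, scale) sample: the difference of two i.i.d. exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon, rng):
    """Epsilon-differentially private count of records matching `predicate`.

    Adding or removing one person changes a count by at most 1 (sensitivity 1),
    so Laplace noise with scale 1 / epsilon gives epsilon-DP for this query.
    """
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical screening scores; count users scoring 10 or above (true count: 5).
scores = [3, 12, 7, 15, 9, 11, 4, 18, 6, 10]
rng = random.Random(42)  # illustration only: not a secure randomness source
noisy = private_count(scores, lambda s: s >= 10, epsilon=1.0, rng=rng)
```

Each additional query on the same data consumes more of the privacy budget, since the ε values of sequential queries add up. Systems therefore need to track cumulative ε rather than treat every release independently.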

Patient Consent and Control

Consent processes for mental health AI should use plain language explanations and allow granular data sharing options. Patients might consent to share mood data with therapists but not with researchers. Dynamic consent interfaces could let patients adjust permissions as their comfort levels change during treatment.
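Granular, dynamic consent can be modelled as permissions keyed by data category and recipient, with a default of "no" and a history of every change. The class and category names below are hypothetical. A real system would also need authentication, persistent storage, and propagation of revocations to systems that already received data.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ConsentLedger:
    """Per-patient sharing permissions keyed by (data category, recipient).

    Anything not explicitly granted is denied, and every change is kept in
    `history` so past permission states can be audited.
    """
    grants: dict = field(default_factory=dict)
    history: list = field(default_factory=list)

    def update(self, category, recipient, allowed):
        self.grants[(category, recipient)] = allowed
        self.history.append((datetime.now(timezone.utc), category, recipient, allowed))

    def allows(self, category, recipient):
        return self.grants.get((category, recipient), False)


ledger = ConsentLedger()
ledger.update("mood_data", "therapist", True)     # share mood data with the therapist
ledger.update("mood_data", "researchers", False)  # but not with researchers
```

If the patient later becomes comfortable with research use, a single `update` call changes that permission while leaving the earlier decision on record.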

Accountability and Governance

Mental health AI demands clear responsibility structures. Clinical oversight committees should include ethicists who can evaluate algorithmic recommendations against therapeutic best practices. Incident reporting systems must capture not just data breaches but also clinical concerns about algorithmic outputs.

Ensuring Human Oversight and Collaboration

Defining Human Oversight in AI Systems

Effective human oversight combines technical monitoring with ethical judgment. Clinical AI systems might employ psychiatrist review panels that sample algorithmic decisions, while allowing clinicians to override questionable recommendations. This dual-layer approach maintains efficiency while preserving human judgment for complex cases.
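Both layers can be represented in a small data model: a clinician's override always determines the final decision, and a random sample of decisions is drawn for retrospective panel review. The names below are hypothetical. Uniform random sampling is only one option; a panel might oversample high-risk case types instead.

```python
import random
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    case_id: str
    ai_recommendation: str
    clinician_override: Optional[str] = None

    @property
    def final(self) -> str:
        # A clinician's decision, when recorded, always takes precedence.
        if self.clinician_override is not None:
            return self.clinician_override
        return self.ai_recommendation

    @property
    def overridden(self) -> bool:
        return (self.clinician_override is not None
                and self.clinician_override != self.ai_recommendation)


def sample_for_panel(decisions, rate, seed=None):
    """Select each decision independently with probability `rate` for panel review."""
    rng = random.Random(seed)
    return [d for d in decisions if rng.random() < rate]


cases = [Decision("c1", "weekly check-in"),
         Decision("c2", "increase session frequency", clinician_override="weekly check-in")]
```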

Establishing Clear Guidelines and Ethical Frameworks

AI ethics frameworks should evolve alongside technological capabilities. A living document approach allows organizations to update principles as new challenges emerge - like addressing microaggressions in therapeutic chatbots. Regular impact assessments can anticipate how guideline changes might affect vulnerable populations.

Promoting Transparency and Explainability in AI

Explainability features should accommodate different user needs. Clinicians might require detailed feature importance metrics, while patients benefit from simplified explanations using relatable analogies. Multi-layered explanation systems can serve both technical and non-technical stakeholders effectively.
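One way to build such layers is to render the same attribution scores twice: as signed numbers for clinicians and as plain-language factors for patients. The attribution values, feature names, and wording below are hypothetical, and the scores are assumed to come from an explanation method such as LIME.

```python
def explain(attributions, audience, top_k=3, plain_labels=None):
    """Render one set of feature attributions for different audiences.

    Clinicians see the top signed weights; patients see the same factors in
    plain language without numbers.
    """
    ranked = sorted(attributions.items(), key=lambda kv: abs(kv[1]), reverse=True)[:top_k]
    if audience == "clinician":
        return "; ".join(f"{name}: {weight:+.2f}" for name, weight in ranked)
    labels = plain_labels or {}
    factors = [labels.get(name, name.replace("_", " ")) for name, _ in ranked]
    return "This suggestion was based mainly on " + ", ".join(factors) + "."


attributions = {"sleep_hours_change": -0.42, "phq9_delta": 0.31,
                "missed_sessions": 0.05, "step_count": 0.01}
labels = {"sleep_hours_change": "recent changes in your sleep",
          "phq9_delta": "your latest questionnaire answers"}
print(explain(attributions, "clinician"))
# → sleep_hours_change: -0.42; phq9_delta: +0.31; missed_sessions: +0.05
print(explain(attributions, "patient", plain_labels=labels))
# → This suggestion was based mainly on recent changes in your sleep, your latest questionnaire answers, missed sessions.
```

Deriving both views from one set of scores keeps them consistent, so a patient is never told a different story from the one the clinician sees.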

Fostering Interdisciplinary Collaboration

AI development teams for sensitive applications should include practicing clinicians who can identify real-world implementation challenges. Anthropologists can help recognize cultural factors that quantitative data might miss. This diversity prevents narrow technical perspectives from dominating system design.

Developing Robust Mechanisms for Accountability

Accountability systems should track both algorithmic performance and human review actions. If clinicians frequently override certain types of recommendations, this signals potential algorithm issues. Such feedback loops create continuous improvement cycles for both AI and human decision-making.
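The feedback loop can start with a simple count: log whether each recommendation was overridden, then flag recommendation types whose override rate is unusually high. The 30% threshold, 20-case minimum, and type names below are hypothetical. A high override rate is a prompt for investigation, not proof that the algorithm is wrong.

```python
from collections import Counter


def override_hotspots(events, min_cases=20, threshold=0.3):
    """Find recommendation types that clinicians override unusually often.

    events: iterable of (recommendation_type, was_overridden) pairs.
    Only types with at least `min_cases` logged decisions are considered, so a
    handful of overrides does not trigger a false alarm.
    """
    totals, overrides = Counter(), Counter()
    for rec_type, overridden in events:
        totals[rec_type] += 1
        overrides[rec_type] += int(overridden)
    rates = {t: overrides[t] / totals[t] for t in totals if totals[t] >= min_cases}
    return {t: r for t, r in rates.items() if r > threshold}


events = ([("medication_timing", True)] * 12 + [("medication_timing", False)] * 18
          + [("sleep_hygiene_tip", True)] * 2 + [("sleep_hygiene_tip", False)] * 40
          + [("crisis_referral", True)] * 5)
print(override_hotspots(events))
# → {'medication_timing': 0.4}
```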

Encouraging Public Engagement and Dialogue

Community review boards made up of patients, advocates, and ethicists can test AI systems against the lived experience of the people they serve. Participatory design workshops help ensure technologies meet actual user needs rather than developer assumptions. These processes are especially crucial for marginalized communities.

Prioritizing Human Values and Well-being in AI Design

Therapeutic AI should enhance rather than replace human connections. Systems might prompt clinicians to explore topics the patient avoids, while always preserving the human element of care. Well-designed AI becomes a collaborative tool that amplifies therapeutic relationships instead of displacing them.

The Future of AI-Enhanced Mental Wellness

AI's Potential to Diagnose Mental Health Conditions

Emerging multimodal AI systems can correlate speech patterns, typing rhythms, and physiological markers to detect subtle changes in mental state. These systems don't replace clinical assessment, but they provide additional quantitative data points that practitioners might overlook during brief consultations.

Longitudinal analysis capabilities allow AI to identify gradual changes that might signal developing conditions, enabling preventative interventions before crises occur. This represents a paradigm shift from reactive to proactive mental healthcare.
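A basic form of longitudinal analysis fits a least-squares trend line to recent scores and flags a sustained rise. The sketch below assumes weekly scores where higher means worse; the window of 8 observations and the slope threshold of 0.5 points per week are hypothetical values that would need clinical validation.

```python
def trend_slope(values):
    """Ordinary least-squares slope of values against their position (0, 1, 2, ...)."""
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    cov = sum((i - mean_x) * (y - mean_y) for i, y in enumerate(values))
    var = sum((i - mean_x) ** 2 for i in range(n))
    return cov / var


def gradual_worsening(values, window=8, min_slope=0.5):
    """True if the least-squares trend over the last `window` scores is at least min_slope per step.

    Assumes higher scores mean worse symptoms; window and min_slope are hypothetical.
    """
    recent = values[-window:]
    return len(recent) >= 3 and trend_slope(recent) >= min_slope


worsening = [4, 5, 5, 6, 7, 7, 8, 9]  # hypothetical weekly screening scores
stable = [6, 5, 6, 6, 5, 6, 6, 5]
print(gradual_worsening(worsening), gradual_worsening(stable))
# → True False
```

No single week in the worsening series jumps sharply, which is exactly the kind of gradual change that point-in-time thresholds tend to miss.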

Personalized Treatment Plans

AI-powered treatment personalization can draw on many variables, from genetic markers to lifestyle factors. The system might suggest adjusting therapy approaches based on detected learning styles or modifying medication timing according to circadian rhythms. This precision medicine approach could significantly improve treatment adherence and outcomes.

Improved Accessibility to Mental Health Services

AI triage systems can manage initial assessments, directing users to appropriate care levels while providing immediate coping tools. In rural areas with clinician shortages, these systems maintain basic support between in-person sessions, helping prevent treatment gaps that worsen outcomes.
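However a triage model scores a user, the final routing step is often an explicit, auditable rule layer. The sketch below shows only that layer. It assumes a 0 to 27 screening total such as the PHQ-9, whose standard severity bands begin at 10 (moderate) and 15 (moderately severe), plus a separate self-harm flag. The mapping from scores to care levels is hypothetical and illustrative, not clinical guidance.

```python
def triage(score, self_harm_flag):
    """Route a screening result to a care level (hypothetical mapping).

    score: total on a 0-27 scale such as the PHQ-9.
    self_harm_flag: any indication of self-harm risk skips automated tools entirely.
    """
    # Checked first so that a malformed score can never block a crisis escalation.
    if self_harm_flag:
        return "immediate human crisis support"
    if not 0 <= score <= 27:
        raise ValueError("score must be between 0 and 27")
    if score >= 15:
        return "prompt clinician appointment"
    if score >= 10:
        return "clinician review plus self-guided coping tools"
    return "self-guided coping tools with periodic re-screening"
```

Keeping escalation rules explicit rather than buried in a model makes them easy for clinicians to review and change.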

Enhanced Mental Health Monitoring and Support

Wearable-integrated AI can detect early warning signs like sleep disturbances or social withdrawal patterns. Discreet alerts encourage users to practice coping strategies before symptoms escalate, creating a continuous care model that traditional psychiatry struggles to provide.
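Early warning signals work best relative to each person's own baseline rather than a population norm. The sketch below compares recent average sleep with a user's history, expressed in units of their nightly standard deviation. The 14-night minimum and the threshold of 2 standard deviations are hypothetical. This version only detects reduced sleep, not oversleeping.

```python
from statistics import mean, stdev


def sleep_alert(history_hours, recent_hours, z_threshold=-2.0, min_history=14):
    """Flag when recent average sleep falls well below the user's own baseline.

    Returns (alert, z), where z is the recent mean expressed in baseline
    nightly standard deviations; returns (False, None) without enough data.
    """
    if len(history_hours) < min_history or not recent_hours:
        return False, None
    base_mean, base_sd = mean(history_hours), stdev(history_hours)
    if base_sd == 0:
        return False, None
    z = (mean(recent_hours) - base_mean) / base_sd
    return z <= z_threshold, z


# Hypothetical two-week baseline of nightly sleep in hours.
history = [7.0, 7.5, 6.8, 7.2, 7.1, 6.9, 7.3, 7.0, 7.4, 6.7, 7.2, 7.1, 7.0, 6.9]
alert, z = sleep_alert(history, [5.0, 5.2, 4.8])
```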

Ethical Considerations and Challenges

The expansion of mental health AI necessitates robust oversight mechanisms. Algorithmic audits should evaluate whether systems disproportionately flag certain demographics as high-risk. Transparency reports detailing system limitations help prevent overreliance on algorithmic assessments.

The Role of Human Clinicians

The most effective implementations position AI as a clinical decision support tool. Algorithms surface relevant information while clinicians focus on therapeutic rapport and nuanced interpretation, combining technological scale with human wisdom. This collaborative model preserves the art of psychiatry while enhancing its science.
