The Role of AI in Sustainable Mental Health Advocacy and Awareness

Boosting Mental Health Literacy Through AI-Generated Content

Enhancing Accessibility to Mental Health Resources

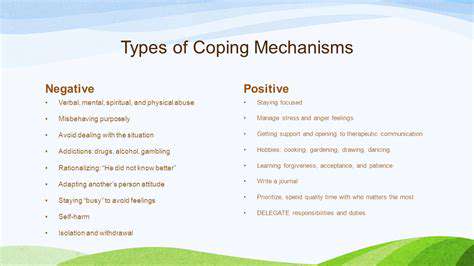

AI-powered tools have made mental health information more accessible to diverse populations, including people in remote or underserved regions. These platforms can deliver educational materials in multiple languages and in several formats (text, audio, and video) to suit different learning preferences. As a result, individuals without direct access to mental health professionals can still find timely information about common mental health challenges, coping mechanisms, and available support options.

Interactive tools like AI-driven chatbots offer immediate responses to user inquiries, guiding them through complex mental health topics with sensitivity. These platforms create a safe, private space for users to seek information without fear of judgment. By removing geographic, linguistic, and social barriers, AI-driven content fosters mental health awareness and builds stronger, more resilient communities.

Personalized Learning and Support for Mental Health Awareness

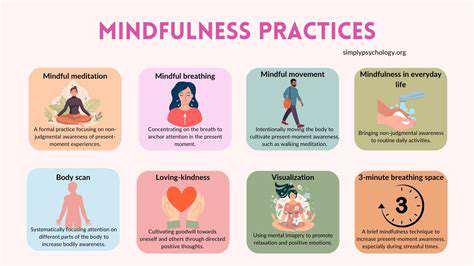

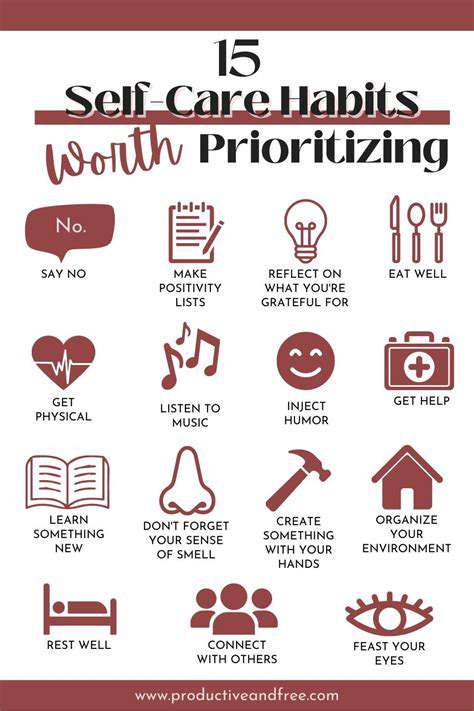

The adaptability of AI-generated content allows for personalized learning experiences, which can improve users' understanding of mental health. By analyzing user behavior and preferences, these systems deliver modules tailored to individual needs. For instance, someone experiencing stress might receive targeted resources on stress management techniques, mindfulness practices, or situation-specific coping strategies.

Moreover, AI platforms continuously refine content based on user feedback and progress, ensuring relevance and effectiveness. This dynamic approach keeps users engaged in their mental health journey, empowering them to recognize early warning signs and seek appropriate care. Ultimately, personalized AI-driven education contributes to a more informed and healthier society.
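As a rough illustration of the personalization loop described above, the following Python sketch ranks learning modules by how well their topic tags match a user's interests, then adjusts those interests after feedback. Everything here is an assumption made for illustration: the `MODULES` catalogue, the tag names, and the simple plus-or-minus-one weighting are hypothetical, and a real platform would likely use richer signals and clinically reviewed content.

```python
from collections import Counter

# Hypothetical catalogue: module title -> topic tags (illustrative only).
MODULES = {
    "Breathing exercises for acute stress": {"stress", "mindfulness"},
    "Sleep hygiene basics": {"sleep"},
    "Planning for workplace pressure": {"stress", "work"},
    "Recognizing early warning signs": {"awareness"},
}


def recommend(interests: Counter, limit: int = 2) -> list[str]:
    """Rank modules by the summed interest weight of their tags."""
    scored = [
        (sum(interests[tag] for tag in tags), title)
        for title, tags in MODULES.items()
    ]
    # Highest score first; ties are broken alphabetically for determinism.
    ranked = sorted(scored, key=lambda pair: (-pair[0], pair[1]))
    return [title for score, title in ranked if score > 0][:limit]


def record_feedback(interests: Counter, title: str, helpful: bool) -> None:
    """Nudge the weights of a module's tags up or down after a user rating."""
    for tag in MODULES[title]:
        interests[tag] += 1 if helpful else -1


# A user whose activity suggests interest in stress and work topics.
profile = Counter({"stress": 2, "work": 1})
first = recommend(profile)

# The user marks the top suggestion as unhelpful, so the ranking shifts.
record_feedback(profile, "Planning for workplace pressure", helpful=False)
second = recommend(profile)
```

In this sketch the workplace module ranks first initially and drops to second after the negative rating, which mirrors the idea of refining content based on user feedback.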

Overcoming Challenges and Ethical Considerations in AI-Driven Mental Health Initiatives

Ensuring Data Privacy and Security

Protecting sensitive user data remains a top priority in AI-driven mental health programs. Given the deeply personal nature of this information, robust security protocols—such as end-to-end encryption, secure storage, and strict access controls—are essential to maintain trust and comply with regulations like GDPR and HIPAA.
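To make the idea of strict access controls more concrete, here is a minimal Python sketch using only the standard library. It shows two things: replacing a raw user identifier with a keyed digest (pseudonymization, which is a complement to encryption and not a substitute for it) and filtering a record so each role sees only permitted fields. The role names, field names, and the hard-coded key are hypothetical and chosen purely for illustration; this is not a compliance recipe for GDPR or HIPAA.

```python
import hashlib
import hmac

# Hypothetical role-to-field policy. A real deployment would load this from
# reviewed configuration instead of hard-coding it.
ALLOWED_FIELDS = {
    "clinician": {"user_ref", "mood_log", "notes"},
    "analyst": {"user_ref", "mood_log"},
}


def pseudonymize(user_id: str, key: bytes) -> str:
    """Replace a raw identifier with a keyed HMAC-SHA256 hex digest."""
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def view_record(record: dict, role: str) -> dict:
    """Return only the fields the role may read; unknown roles get nothing."""
    allowed = ALLOWED_FIELDS.get(role, set())
    return {name: value for name, value in record.items() if name in allowed}


# Demo key only. In practice the key would come from a secrets manager.
key = b"demo-key-do-not-use-in-production"
record = {
    "user_ref": pseudonymize("user-123", key),
    "mood_log": [3, 4, 2],
    "notes": "Reported trouble sleeping.",
}

analyst_view = view_record(record, "analyst")      # no free-text notes
clinician_view = view_record(record, "clinician")  # full record
guest_view = view_record(record, "guest")          # empty: role not in policy
```

Denying by default for unknown roles is a deliberate choice in this sketch: a missing policy entry results in no access instead of full access.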

Organizations must also establish transparent data policies that clearly state how information is collected, used, and safeguarded. Regular security assessments and up-to-date cybersecurity measures help identify vulnerabilities and strengthen defenses against breaches.

Addressing Bias and Ensuring Fairness

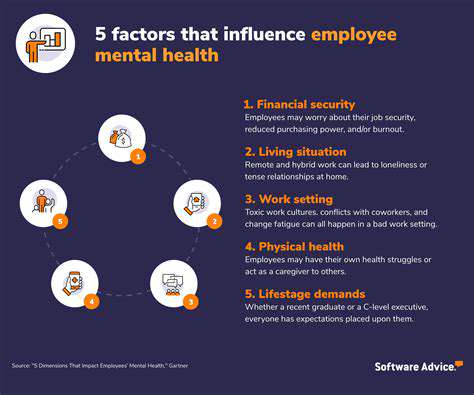

Since AI models reflect the data they’re trained on, biased datasets can lead to inequitable mental health support. It’s critical to identify and mitigate biases related to ethnicity, gender, socioeconomic status, and other factors influencing mental health outcomes.

Developers should prioritize diverse, representative datasets and routinely evaluate AI systems for biased behavior. Integrating fairness metrics and involving diverse stakeholders in development can minimize disparities and promote equitable support.
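One commonly used fairness metric is the demographic parity difference: the gap between the highest and lowest rate at which different groups receive a positive decision. The Python sketch below computes it for a hypothetical system that decides whether to surface a support resource to a user. The group labels and decisions are made-up sample data, and this single metric is only one of several that a fairness review might consider.

```python
from collections import defaultdict


def selection_rates(groups: list[str], decisions: list[int]) -> dict[str, float]:
    """Compute the share of positive decisions (1) within each group."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for group, decision in zip(groups, decisions):
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(groups: list[str], decisions: list[int]) -> float:
    """Gap between the highest and lowest group selection rates (0 = parity)."""
    rates = selection_rates(groups, decisions)
    return max(rates.values()) - min(rates.values())


# Made-up sample: 1 means the system surfaced a support resource to the user.
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
decisions = [1, 1, 1, 0, 1, 0, 0, 0]

rates = selection_rates(groups, decisions)
gap = demographic_parity_difference(groups, decisions)
```

Here group "a" is served at a rate of 0.75 and group "b" at 0.25, giving a gap of 0.5. A gap that large would be a signal to investigate the training data and model behavior, though what counts as acceptable depends on the context.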

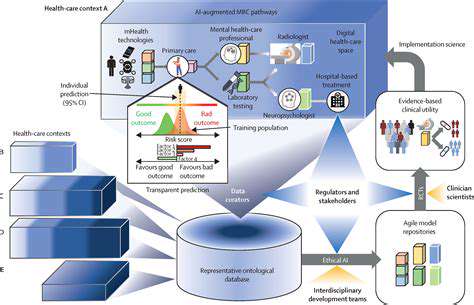

Maintaining Human-AI Collaboration Balance

While AI enhances mental health services, it cannot replicate the empathy and nuanced understanding of human professionals. Striking the right balance between automation and human interaction is key to preserving compassionate care.

AI tools should complement, not replace, clinicians. Training practitioners to effectively incorporate AI insights into their work ensures that human judgment remains central to treatment.

Managing Ethical Dilemmas in AI Deployment

AI applications in mental health raise ethical concerns around consent, autonomy, and potential harm. To make informed decisions, users must understand how these systems operate and what their limitations are.

Ethical frameworks should guide AI development, emphasizing user rights and safety. Regular ethical reviews and consultation with ethicists help navigate complex dilemmas responsibly.

Ensuring Accessibility and Inclusivity

AI-driven mental health initiatives must cater to diverse populations, including those with disabilities, language barriers, or limited tech literacy. Exclusion risks leaving vulnerable groups without support.

Designing intuitive, multilingual interfaces and keeping tools affordable and usable across a range of devices broadens access. Prioritizing inclusivity helps ensure that the benefits reach all users equitably.

Legal and Regulatory Compliance

Navigating varying regional laws on health data and AI applications is a significant hurdle for organizations. Staying updated on evolving regulations is crucial to avoid penalties and protect users.

Collaborating with legal experts and implementing comprehensive compliance strategies helps organizations adhere to standards. Regular audits ensure ongoing alignment with laws and ethics.

Promoting Transparency and User Trust

Trust is foundational for AI-driven mental health programs. Clear communication about system operations, limitations, and data practices builds confidence and encourages engagement.

Providing straightforward explanations, soliciting feedback, and addressing concerns openly enhances transparency. Detailed documentation and open communication channels foster lasting trust and accountability.
