The Ethical Use of AI in Personalized Psychiatry

The Promise and Peril of AI-Powered Mental Health

The Rise of Intelligent Machines

Artificial intelligence is reshaping many areas of modern life. While self-driving cars capture headlines, the quieter shift toward personalized healthcare may ultimately prove more transformative. These systems excel at spotting subtle correlations in massive datasets, patterns that human analysts would be unlikely to find by inspection alone.

This technological evolution isn't slowing down. With each passing quarter, we see more powerful processors and sophisticated algorithms emerging. What began as academic curiosities in university labs now powers critical decision-making across global industries.

Ethical Considerations in AI Development

The sophistication of modern AI brings profound ethical questions to the forefront. Algorithmic bias doesn't just represent a technical challenge - it reflects our societal blind spots encoded in silicon. When health management systems make recommendations, we must scrutinize whether they reinforce or reduce existing disparities.

Transparency remains the cornerstone of ethical AI implementation. Without understanding how systems reach conclusions, we risk creating digital oracles that demand blind faith rather than informed trust.

The Impact on the Workforce

Job markets worldwide are experiencing AI-induced tremors. Certain roles are vanishing while entirely new categories of employment emerge overnight. This transformation demands flexible education systems that can retrain workers at the pace of technological change.

The narrative shouldn't focus solely on jobs lost. Creative positions that blend human intuition with machine analysis are multiplying. Future-proof careers will leverage uniquely human qualities that algorithms can't replicate.

The Potential for Innovation

AI's pattern recognition capabilities are changing how scientific research is done. Medical researchers are using these tools to look for disease markers before symptoms appear, while climate scientists model complex environmental interactions in greater detail. We're not just solving problems faster - we're asking entirely new questions.

This analytical power comes with responsibility. The same tools predicting cancer risks could be misused without proper safeguards. Innovation must be paired with ethical foresight.

The Need for Responsible AI Governance

International cooperation has become critical as AI systems transcend borders. Patchwork regulations create dangerous loopholes that bad actors exploit. We need harmonized standards that protect citizens while allowing ethical innovation to flourish.

The Future of Human-AI Collaboration

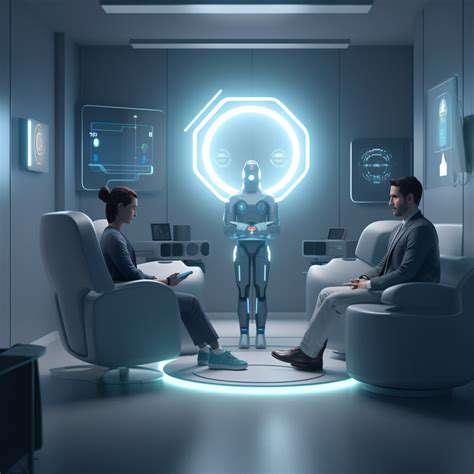

The most promising applications combine human judgment with machine precision. AI diagnostics are most useful when clinicians treat the algorithm as a consultative partner rather than an oracle, weighing its output against their own assessment. This balanced approach can yield better outcomes than either could achieve alone.

The path forward requires dismantling false dichotomies between human and artificial intelligence. By focusing on complementary strengths, we can address challenges that have long resisted solutions.

Data Privacy and Security in AI-Driven Psychiatry

Data Minimization and Purpose Limitation

Modern psychiatry faces a paradox - deeper insights require more data, but patient trust demands restraint. Clinicians must walk this tightrope by collecting only essential information with clearly defined usage parameters. When systems gather extraneous details, they create unnecessary vulnerabilities while eroding therapeutic relationships.
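
One way to make purpose limitation concrete is to filter each record against an allowlist tied to a declared purpose. The sketch below is illustrative only: the purpose names, the field names, and the `minimize` helper are all assumptions, not part of any particular clinical system.

```python
from typing import Any

# Hypothetical mapping from a declared purpose to the fields it may use.
ALLOWED_FIELDS: dict[str, frozenset[str]] = {
    "treatment": frozenset({"patient_id", "phq9_score", "medications"}),
    "billing": frozenset({"patient_id", "insurance_id"}),
}


def minimize(record: dict[str, Any], purpose: str) -> dict[str, Any]:
    """Return only the fields permitted for the declared purpose."""
    if purpose not in ALLOWED_FIELDS:
        # Refuse undeclared purposes instead of defaulting to full access.
        raise ValueError(f"undeclared purpose: {purpose!r}")
    allowed = ALLOWED_FIELDS[purpose]
    return {key: value for key, value in record.items() if key in allowed}
```

Rejecting an unknown purpose outright, rather than falling back to the full record, keeps any new use of the data an explicit decision.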

Transparency and Explainability in AI Models

Therapeutic progress depends on shared understanding. When AI systems operate as inscrutable black boxes, they undermine the clinician-patient alliance. Developers must prioritize interpretable models that allow mental health professionals to explain recommendations in plain language. Detailed model documentation serves dual purposes - facilitating clinical oversight and enabling continuous improvement.

Data Security Measures and Access Controls

Cybersecurity in mental health isn't just about technology - it's about preserving human dignity. Beyond standard encryption protocols, institutions need comprehensive data governance frameworks. Regular penetration testing and mandatory security training create multiple defensive layers. The most secure systems combine technical safeguards with rigorous personnel protocols.

Patient Consent and Control over Data

True patient empowerment means moving beyond checkbox consent forms. Interactive digital interfaces should allow individuals to adjust privacy settings as their comfort evolves. Granular controls might permit data sharing for treatment while restricting research use, or vice versa. This dynamic approach respects autonomy while maintaining clinical utility.
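
Granular, revisable consent could be modeled as per-purpose flags that default to denied. This is a minimal sketch under that assumption; `ConsentRecord`, its methods, and the purpose labels are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRecord:
    """Per-purpose consent flags that a patient can change at any time."""

    patient_id: str
    # Any purpose not listed here is treated as denied.
    granted: dict[str, bool] = field(default_factory=dict)

    def update(self, purpose: str, allowed: bool) -> None:
        """Record the patient's current choice for one purpose."""
        self.granted[purpose] = allowed

    def permits(self, purpose: str) -> bool:
        """Return True only if the patient has explicitly opted in."""
        return self.granted.get(purpose, False)
```

A patient could then allow `"treatment"` while leaving `"research"` denied, and withdraw either later with another `update` call.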

Bias Mitigation in AI Models and Algorithms

Bias in mental health AI isn't merely a technical flaw - it's potentially life-altering. Teams must examine training data for hidden assumptions about symptom presentation across demographic groups. Continuous monitoring for disparate impact should be built into deployment plans, not added as an afterthought.
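
As one possible monitoring signal, a deployment could compare how often the model flags patients in each demographic group relative to a reference group. The sketch below assumes binary flags and a single group label per patient; `flag_rate_ratio` is a hypothetical helper, and the ratio at which to raise an alert is a policy decision that is not set here.

```python
def flag_rate_ratio(flags, groups, reference):
    """Return each group's positive-flag rate divided by the reference group's rate."""
    counts = {}  # group -> (number of patients, number flagged)
    for flagged, group in zip(flags, groups):
        seen, positive = counts.get(group, (0, 0))
        counts[group] = (seen + 1, positive + int(bool(flagged)))

    rates = {group: positive / seen for group, (seen, positive) in counts.items()}
    reference_rate = rates[reference]
    if reference_rate == 0:
        raise ValueError("reference group has no positive flags")
    return {group: rate / reference_rate for group, rate in rates.items()}
```

A ratio far from 1.0 does not prove bias on its own, since underlying prevalence may differ, but it marks where closer clinical review is warranted.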

Data Retention and Disposal Policies

Thoughtful data lifecycle management reflects professional ethics. Automatic expiration protocols should purge outdated records, while secure deletion methods prevent forensic recovery. These policies require periodic review as technology and regulations evolve - what's compliant today may be inadequate tomorrow.
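
An automatic expiration check might look like the following sketch. The seven-year window, the `created_at` field, and both helper names are assumptions for illustration; actual retention periods depend on local law and institutional policy, and the secure deletion step itself is not shown.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention window, not a legal recommendation.
RETENTION = timedelta(days=365 * 7)


def is_expired(created_at: datetime, now: datetime,
               retention: timedelta = RETENTION) -> bool:
    """Return True when a record is older than the retention window."""
    return now - created_at > retention


def split_expired(records, now):
    """Partition records into (keep, purge) lists by age."""
    keep, purge = [], []
    for record in records:
        target = purge if is_expired(record["created_at"], now) else keep
        target.append(record)
    return keep, purge


# Example call with a timezone-aware clock.
keep, purge = split_expired([], datetime.now(timezone.utc))
```

Passing `now` in explicitly, rather than reading the clock inside the function, makes the policy easy to test and to re-run when the retention period changes.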

Algorithmic Bias and Equity in AI-Powered Mental Health Assessments

Algorithmic Bias in Mental Health Assessments

The diagnostic algorithms revolutionizing mental healthcare carry invisible baggage - the unconscious biases of their creators and the historical inequities in their training data. When systems developed primarily on urban, affluent populations encounter rural patients or marginalized communities, their assessments risk becoming dangerously misleading. These aren't abstract concerns - misapplied diagnostic criteria can steer individuals toward inappropriate treatments or away from needed care.

Cultural context shapes emotional expression in ways algorithms often miss. Depression can present differently across cultural groups, yet many systems apply a single evaluation framework to everyone. Without deliberate efforts to capture this diversity, we risk creating digital systems that only recognize distress when it presents in culturally familiar ways.

Ensuring Equity in AI-Powered Assessments

Building equitable mental health AI requires more than technical fixes - it demands paradigm shifts. Development teams must include cultural psychiatrists and community advocates alongside data scientists. Testing protocols should emphasize performance across demographic subgroups rather than aggregate accuracy. Perhaps most crucially, we need mechanisms for continuous feedback from the communities these tools serve.
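
To illustrate why subgroup reporting matters, the sketch below computes accuracy separately for each group label. It assumes labels, predictions, and group memberships arrive as parallel sequences; `accuracy_by_group` is a hypothetical helper, not a function from any specific library.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return a dict mapping each subgroup label to its accuracy."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, prediction, group in zip(y_true, y_pred, groups):
        total[group] += 1
        correct[group] += int(truth == prediction)
    return {group: correct[group] / total[group] for group in total}


# Toy data: aggregate accuracy is 4/6, which hides the gap between groups.
y_true = [1, 0, 1, 1, 0, 1]
y_pred = [1, 0, 1, 0, 1, 1]
groups = ["a", "a", "a", "b", "b", "b"]
per_group = accuracy_by_group(y_true, y_pred, groups)
```

In this toy data the model is right on every case in group `a` but on only one of three cases in group `b`, a difference that a single aggregate score would conceal.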

Regulatory approaches must balance innovation with protection. Rather than stifling development, thoughtful frameworks can channel creativity toward solving healthcare disparities. The most effective regulations will emerge from dialogue between technologists, clinicians, patients, and ethicists - each bringing crucial perspectives to the table.

Ultimately, the measure of these technologies won't be their sophistication but their impact. Are they reducing disparities in mental healthcare access? Are marginalized communities seeing improved outcomes? By keeping these questions central, we can ensure AI becomes a tool for mental health equity rather than another source of disparity.

Read more about The Ethical Use of AI in Personalized Psychiatry

Hot Recommendations

- AI Driven Personalized Sleep Training for Chronic Insomnia

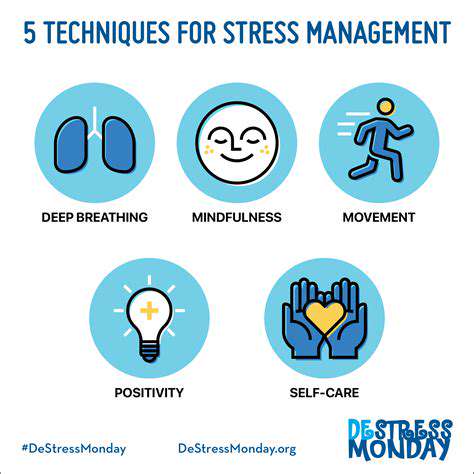

- AI Driven Personalization for Sustainable Stress Management

- Your Personalized Guide to Overcoming Limiting Beliefs

- Understanding Gender Dysphoria and Mental Health Support

- The Power of Advocacy: Mental Health Initiatives Reshaping Society

- Building a Personalized Self Compassion Practice for Self Worth

- The Ethics of AI in Mental Wellness: What You Need to Know

- AI Driven Insights into Your Unique Stress Triggers for Personalized Management

- Beyond Awareness: Actionable Mental Health Initiatives for Lasting Impact

- Creating a Personalized Sleep Hygiene Plan for Shift Workers