AI for Social Anxiety: Virtual Practice and Support

Imagine stepping into a world where your deepest fears can be confronted safely: this is the promise of Virtual Reality Exposure Therapy (VRET). Unlike traditional methods, VR therapy constructs digital environments tailored to individual anxieties, allowing patients to face challenges at their own pace. Research suggests that repeated exposure in these controlled settings can weaken learned fear responses, offering hope for conditions ranging from PTSD to social phobias. The technology's ability to simulate real-world scenarios while giving the therapist fine-grained control over the environment marks a significant shift in how exposure therapy can be delivered.

Creating Safe and Controlled Environments

Therapeutic progress hinges on the delicate balance between challenge and safety. VR environments are engineered with multiple safety protocols, including adjustable intensity settings and immediate exit options. Therapists can precisely calibrate every element, from crowd sizes in social anxiety simulations to spider proximity in arachnophobia treatments. This granular control lets practitioners build a finely graded difficulty curve for each session, keeping patients challenged enough to make progress without becoming overwhelmed.

Gradual Exposure and Desensitization

Effective treatment draws on habituation, the gradual reduction of a response to a repeated stimulus. VR systems implement this through tiered exposure hierarchies, beginning with minimally anxiety-provoking scenarios. For instance, someone with flight anxiety might start by simply sitting in a virtual airport lounge before progressing to a taxiing plane and eventually a full flight. Over repeated sessions, this systematic approach is thought to help the brain's threat-detection circuitry, including the amygdala, learn that the feared situation is safe, gradually weakening the fear response.
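To make the tiered hierarchy concrete, here is a minimal Python sketch of how a system might decide whether to move a patient up a rung. It assumes distress is self-rated on the 0-100 SUDS (Subjective Units of Distress) scale and uses a hypothetical rule: advance once end-of-session distress falls to 30 or below, or to half its in-session peak. The hierarchy, thresholds, and the `next_step_index` helper are illustrative, not a clinical protocol.

```python
from dataclasses import dataclass

@dataclass
class Step:
    name: str
    expected_suds: int  # therapist's pre-session estimate of distress, 0-100

# Hypothetical flight-anxiety hierarchy, ordered from least to most distressing.
HIERARCHY = [
    Step("sit in virtual airport lounge", 20),
    Step("board a parked plane", 40),
    Step("plane taxis to the runway", 60),
    Step("full virtual flight", 85),
]

def next_step_index(current: int, peak_suds: int, end_suds: int,
                    end_threshold: int = 30, drop_ratio: float = 0.5) -> int:
    """Return the hierarchy index to practice next session.

    Assumed rule: advance only if distress fell to a low absolute level, or
    dropped by at least `drop_ratio` of its in-session peak; otherwise repeat.
    """
    habituated = (end_suds <= end_threshold
                  or end_suds <= peak_suds * (1 - drop_ratio))
    if habituated and current < len(HIERARCHY) - 1:
        return current + 1
    return current

# Example: three sessions' (peak, end) SUDS ratings.
step = 0
for peak, end in [(35, 15), (55, 45), (55, 25)]:
    step = next_step_index(step, peak, end)
    print(HIERARCHY[step].name)
```

In the example, the second session is repeated because distress stayed high, which is the "at their own pace" behavior the hierarchy is meant to produce.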

Tailoring the Experience to Individual Needs

Personalization extends beyond scenario selection to encompass sensory elements like lighting, soundscapes, and even olfactory cues in advanced systems. Some VR platforms use machine learning to analyze physiological responses (heart rate, galvanic skin response) during sessions and adjust scenarios automatically in real time. This biofeedback integration creates a dynamic therapy environment that evolves with the patient's progress, aiming to keep the challenge at a therapeutic level throughout treatment.
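The biofeedback loop can be sketched as a simple bounded controller. In this hypothetical Python example, heart rate relative to the patient's resting rate stands in for arousal; the 110-135% target band, the 0.05 step size, and the 160% safety ceiling are assumptions chosen for illustration. A real system would fuse several signals and keep the therapist in the loop.

```python
def adjust_intensity(intensity: float, heart_rate: float, resting_hr: float,
                     low: float = 1.10, high: float = 1.35,
                     step: float = 0.05, ceiling: float = 1.60) -> float:
    """Return the next scenario intensity, clamped to [0, 1].

    Assumed target: heart rate between 110% and 135% of resting, used here as a
    rough stand-in for a workable level of arousal. At or above `ceiling`,
    intensity drops to 0, mirroring the immediate-exit safety option.
    """
    ratio = heart_rate / resting_hr
    if ratio >= ceiling:
        return 0.0                 # safety stop: pause the scenario entirely
    if ratio > high:
        intensity -= step          # over-aroused: ease off
    elif ratio < low:
        intensity += step          # under-challenged: step up
    return min(1.0, max(0.0, intensity))

# Example: a patient with a resting heart rate of 70 bpm.
level = 0.5
for bpm in [72, 75, 88, 99, 120]:
    level = adjust_intensity(level, bpm, resting_hr=70)
    print(f"{bpm} bpm -> intensity {level:.2f}")
```

Stepping by a small fixed amount rather than jumping straight to a target keeps changes gradual, so a single noisy reading cannot swing the scenario from calm to overwhelming.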

Effectiveness in Treating Phobias and Anxiety Disorders

Clinical trials report strong outcomes, and meta-analyses have generally found VR exposure to be roughly as effective as traditional in vivo exposure. A 2023 meta-analysis reported that 72% of participants with specific phobias achieved clinically significant improvement after just six VR sessions. The multisensory immersion may also give VR an advantage over imagination-based exposure, which depends on the patient's ability to vividly picture the feared situation. Particularly promising results have emerged for combat-related PTSD, where VR safely recreates traumatic scenarios that would be impractical or unethical to reproduce in vivo.

Integrating VR Exposure into Existing Therapies

Forward-thinking clinicians are blending VR with established modalities like cognitive behavioral therapy (CBT) and acceptance and commitment therapy (ACT). The virtual environment becomes a laboratory for cognitive restructuring: patients can practice challenging irrational beliefs while immersed in the anxiety-provoking context. Mindfulness techniques can likewise be rehearsed amid customized stress scenarios. This integration addresses both the physiological and the psychological dimensions of anxiety disorders.

Future Directions and Advancements

The next generation of systems incorporates AI-driven scenario generation, creating environments that adapt to patient responses in real time. Researchers are experimenting with haptic feedback suits to add a tactile dimension to exposure scenarios. Perhaps most ambitious is work on emotion-recognition AI that aims to detect subtle facial microexpressions and adjust scenarios before the patient consciously registers rising anxiety. As hardware becomes more affordable and portable, these therapies are beginning to move from clinical settings into home-based treatment.

AI-Driven Personalized Social Skills Training

Personalized Learning Paths

Modern systems may use neural networks that analyze large volumes of behavioral data to construct individual competency profiles. These models can flag not just obvious skill gaps but subtle interaction patterns that might escape human observers. The training modules evolve dynamically: if a user struggles with maintaining eye contact in simulations, the system might introduce targeted micro-exercises focusing solely on gaze before reintegrating this skill into broader scenarios.
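A much simpler, rule-based stand-in for the neural models described above shows the shape of the idea: keep a running score per sub-skill and drill the weakest one in isolation until it clears a threshold. The skill names, the 0.6 mastery cut-off, and the exponential-moving-average update are all hypothetical.

```python
SKILL_THRESHOLD = 0.6  # hypothetical mastery cut-off on a 0-1 scale

def update_profile(profile: dict[str, float], skill: str,
                   observed: float, alpha: float = 0.3) -> None:
    """Blend a new observation into the running skill score (exponential moving average)."""
    previous = profile.get(skill, observed)  # first observation seeds the score
    profile[skill] = (1 - alpha) * previous + alpha * observed

def plan_next_module(profile: dict[str, float]) -> str:
    """Drill the weakest sub-skill in isolation while it is below threshold;
    once every sub-skill clears it, return to a mixed scenario."""
    weakest = min(profile, key=profile.get)
    if profile[weakest] < SKILL_THRESHOLD:
        return f"micro-exercise:{weakest}"
    return "integrated-scenario"

profile = {"eye_contact": 0.35, "turn_taking": 0.72, "small_talk": 0.65}
print(plan_next_module(profile))  # → micro-exercise:eye_contact
for score in [0.8, 0.9, 0.9, 0.95]:
    update_profile(profile, "eye_contact", score)
print(plan_next_module(profile))
```

The moving average means one good simulation does not immediately "graduate" a skill; it takes a few consistent sessions before the plan returns to integrated scenarios.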

Interactive Simulations and Role-Playing

The most advanced platforms now feature emotionally responsive virtual humans capable of nuanced social exchanges. These AI characters remember previous interactions, developing consistent personalities and relationship dynamics with users. A job interview simulation might span multiple sessions, with the virtual interviewer's demeanor changing based on the user's cumulative performance. This creates remarkably lifelike practice environments where social skills can be refined through repetition and variation.
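Session-to-session memory can be illustrated with a small stateful object. This Python sketch is hypothetical: the interviewer stores per-session scores and maps their running average to a demeanor, whereas a production system would track far richer conversational context.

```python
from dataclasses import dataclass, field

@dataclass
class VirtualInterviewer:
    """Hypothetical persistent interviewer whose demeanor tracks cumulative performance."""
    name: str
    history: list[float] = field(default_factory=list)  # per-session scores, 0-1

    def record_session(self, score: float) -> None:
        self.history.append(score)

    def demeanor(self) -> str:
        if not self.history:
            return "neutral"
        avg = sum(self.history) / len(self.history)
        if avg >= 0.7:
            return "warm"      # rapport built up over earlier sessions
        if avg <= 0.4:
            return "guarded"   # earlier answers left a weak impression
        return "neutral"

    def opening_line(self) -> str:
        if not self.history:
            return f"Hello, I'm {self.name}. Shall we begin?"
        return f"Welcome back. This is our session {len(self.history) + 1}."

interviewer = VirtualInterviewer("Dana")
for score in [0.5, 0.8, 0.9]:
    interviewer.record_session(score)
print(interviewer.opening_line(), interviewer.demeanor())
```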

Adaptive Feedback and Coaching

Beyond simple performance metrics, some systems provide layered feedback on verbal content, paralanguage (tone, pitch, and pace), and how well body language matches speech. The AI might highlight how a user's crossed arms contradicted a verbally welcoming statement, or how rising vocal pitch undermined confident words. This multidimensional analysis can surface blind spots that users, and sometimes even experienced therapists, might miss.
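One way to flag the crossed-arms example is to score words and body language separately and look for disagreement. This sketch assumes upstream models already produce a verbal warmth score and a nonverbal openness score, each on a -1 to +1 scale; those models, the field names, and the 0.8 gap threshold are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Moment:
    text: str
    verbal_warmth: float       # -1 (cold) .. +1 (warm); assumed output of a text model
    nonverbal_openness: float  # -1 (closed, e.g. crossed arms) .. +1 (open posture)

def incongruent(m: Moment, min_gap: float = 0.8) -> bool:
    """True when words and body language point in clearly opposite directions."""
    opposite = m.verbal_warmth * m.nonverbal_openness < 0
    return opposite and abs(m.verbal_warmth - m.nonverbal_openness) >= min_gap

def feedback(moments: list[Moment]) -> list[str]:
    return [f'"{m.text}": your body language contradicted your words'
            for m in moments if incongruent(m)]

session = [
    Moment("Great to meet you!", 0.9, -0.6),   # warm words, crossed arms
    Moment("Sure.", 0.1, -0.2),                # mild mismatch, below threshold
    Moment("Come on in.", 0.8, 0.7),           # congruent
]
for line in feedback(session):
    print(line)
```

Requiring both opposite signs and a minimum gap keeps the system from nitpicking near-neutral moments, which would be counterproductive for an anxious user.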

Gamified Learning Experience

Progression systems are becoming increasingly sophisticated, rewarding not just skill mastery but effort and emotional risk-taking. Some platforms use virtual economies where users earn social capital to customize their avatars or unlock new scenarios. Team-based challenges encourage peer learning, while AI-generated social scenarios provide near-endless variety. This gamification layer can turn what might feel like clinical training into an engaging personal growth journey.
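A points formula that rewards effort and courage alongside mastery might look like the following. The weights, the 30-minute effort cap, and the use of a 0-10 anticipated-distress rating as a proxy for emotional risk-taking are assumptions for illustration.

```python
def session_points(skill_gain: float, minutes_practiced: int,
                   anticipated_distress: int) -> int:
    """Hypothetical points formula for one practice session.

    skill_gain: change in a 0-1 skill score this session (may be negative)
    minutes_practiced: effort, capped so time spent alone cannot dominate
    anticipated_distress: user's 0-10 pre-session rating of how daunting the
        scenario felt, used as a proxy for emotional risk-taking
    """
    mastery = max(0.0, skill_gain) * 100      # never penalize a hard session
    effort = min(minutes_practiced, 30) * 2   # at most 60 points
    courage = anticipated_distress * 5        # at most 50 points
    return round(mastery + effort + courage)

# A daunting scenario with no measurable gain still earns points.
print(session_points(skill_gain=0.0, minutes_practiced=20, anticipated_distress=8))  # → 80
```

Because negative skill change is floored at zero, a user who attempts a frightening scenario and struggles still earns effort and courage points.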

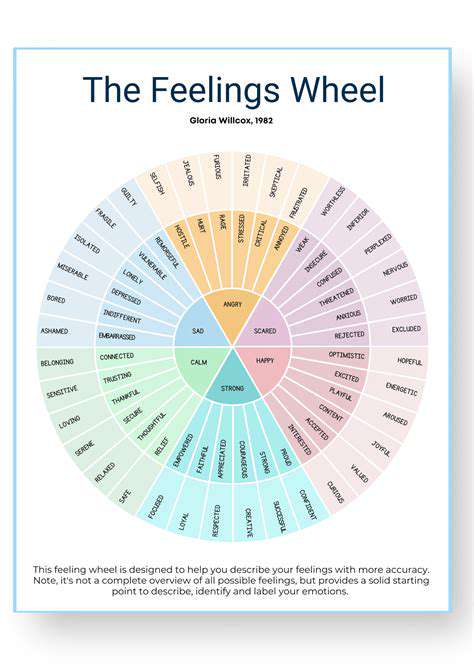

Real-Time Emotional Recognition

Cutting-edge systems can integrate multiple data streams (facial expression analysis, voice stress indicators, even posture sensors) to provide moment-by-moment emotional feedback. Users can see when their anxiety spiked during a conversation and what was happening at that moment. Some platforms visualize this data as emotional waveforms overlaying interaction recordings, helping users identify patterns in their stress responses across different social contexts.
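Fusing several streams into one anxiety trace and marking spikes can be sketched as a weighted average followed by a comparison against a short rolling baseline. The stream names, weights, window size, and jump threshold below are hypothetical; each stream is assumed to be pre-normalized to 0-1, and every sample is assumed to contain at least one known stream.

```python
from statistics import mean

# Hypothetical per-stream weights; each stream is assumed pre-normalized to 0-1.
WEIGHTS = {"face": 0.4, "voice": 0.4, "posture": 0.2}

def fuse(sample: dict[str, float]) -> float:
    """Weighted average over the streams present in this sample.

    Weights are renormalized so a dropped sensor does not drag the score down.
    Assumes at least one known stream is present.
    """
    present = {k: w for k, w in WEIGHTS.items() if k in sample}
    total = sum(present.values())
    return sum(sample[k] * w for k, w in present.items()) / total

def find_spikes(samples: list[dict[str, float]], window: int = 3,
                jump: float = 0.25) -> list[int]:
    """Indices where fused anxiety exceeds the mean of the previous `window` samples by `jump`."""
    scores = [fuse(s) for s in samples]
    return [i for i in range(window, len(scores))
            if scores[i] - mean(scores[i - window:i]) >= jump]

calm = {"face": 0.2, "voice": 0.2, "posture": 0.2}
timeline = [calm, calm, calm,
            {"face": 0.7, "voice": 0.8, "posture": 0.4},  # e.g. an unexpected question
            {"face": 0.3, "voice": 0.25}]                  # posture sensor dropped out
print(find_spikes(timeline))  # → [3]
```

Comparing against the user's own recent baseline, rather than a fixed level, highlights changes in state, which is what the waveform overlays are meant to make visible.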

Progress Tracking and Personalized Reports

The analytics dashboards in some systems approach the depth of professional assessment tools, tracking hundreds of micro-skills across cognitive, emotional, and behavioral domains. Longitudinal charts show skill development trajectories, while comparison tools highlight strengths relative to population norms. Perhaps most valuable are predictive analytics that estimate which real-world situations might still challenge the user, based on patterns in their simulation performance.
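The longitudinal charts and norm comparisons reduce to two familiar calculations: a least-squares slope of a skill score across sessions, and a z-score against a reference population. In this sketch the norm mean and standard deviation are placeholders the caller must supply; no real normative data is implied.

```python
from statistics import mean

def trend_per_session(scores: list[float]) -> float:
    """Least-squares slope of a skill score against session number (needs 2+ sessions)."""
    xs = range(len(scores))
    x_bar, y_bar = mean(xs), mean(scores)
    num = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, scores))
    den = sum((x - x_bar) ** 2 for x in xs)
    return num / den

def z_score(score: float, norm_mean: float, norm_sd: float) -> float:
    """Standard deviations above (+) or below (-) a reference norm."""
    return (score - norm_mean) / norm_sd

conversation_starting = [0.40, 0.45, 0.55, 0.60, 0.70]
print(f"trend: {trend_per_session(conversation_starting):+.3f} per session")
# Placeholder norms for illustration only.
print(f"vs. norm: {z_score(conversation_starting[-1], norm_mean=0.55, norm_sd=0.10):+.1f} SD")
```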

AI Chatbots for Emotional Support and Coping Mechanisms

Understanding the Role of AI in Emotional Support

The therapeutic potential of AI companions lies in their combination of consistency and adaptability. Unlike human supporters, AI systems do not tire or have bad days, so they can offer steady patience and attention, although they can still reflect biases present in their training data. Through machine learning, they can also adapt to each user's communication style and emotional patterns. This may allow them to detect subtle shifts in mood or phrasing that could indicate worsening symptoms, sometimes before users themselves recognize the change.
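Detecting a shift relative to a user's own baseline, rather than against a fixed cut-off, can be sketched with a rolling z-score. This hypothetical example assumes one mood score per day (higher is better), a 14-day baseline, a 3-day recent window, and a two-standard-deviation threshold; none of these values comes from a validated instrument.

```python
from statistics import mean, stdev

def mood_shift(daily_mood: list[float], baseline_days: int = 14,
               recent_days: int = 3, threshold: float = 2.0) -> bool:
    """True if recent average mood falls well below the user's own baseline.

    daily_mood: one score per day, oldest first, higher = better.
    """
    if len(daily_mood) < baseline_days + recent_days:
        return False                       # not enough history to judge yet
    baseline = daily_mood[-(baseline_days + recent_days):-recent_days]
    recent = daily_mood[-recent_days:]
    spread = stdev(baseline) or 1e-9       # guard against a perfectly flat baseline
    return (mean(recent) - mean(baseline)) / spread <= -threshold

steady = [6, 7] * 7                        # two weeks of fairly stable check-ins
print(mood_shift(steady + [6, 7, 6]))      # → False
print(mood_shift(steady + [4, 4, 3]))      # → True
```

Scaling by the user's own variability means a naturally up-and-down user is not flagged for ordinary fluctuation, while a usually steady user's smaller dip still registers.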

Addressing Social Anxiety with AI-Powered Tools

For socially anxious individuals, AI companions can serve as transitional objects: safer than human interaction yet more dynamic than journaling. Advanced systems guide users through graduated social challenges, from brief text exchanges to voice conversations to video interactions. The AI can simulate various personality types and interaction styles, helping users prepare for diverse real-world encounters. Some platforms even offer social rehearsals where users can practice upcoming stressful interactions, like a first date or an important presentation.

The Importance of Ethical Considerations

As these technologies advance, robust ethical frameworks become crucial. Some developers now implement emotional circuit breakers that detect when users may be becoming overly dependent on the AI relationship. Clear boundary markers remind users they're interacting with software, not a sentient being. Perhaps most importantly, well-designed systems encourage human connection rather than replacing it, often suggesting real-world support options when appropriate.
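A usage-based circuit breaker might be as simple as the following Python sketch. The seven-day window, the 300-minute weekly limit, and the count of late-night (midnight to 5 a.m.) sessions are hypothetical heuristics; real dependence detection would need clinical input and more nuanced signals.

```python
from datetime import datetime, timedelta

def should_nudge(session_starts: list[datetime], session_minutes: list[int],
                 now: datetime, max_weekly_minutes: int = 300,
                 max_late_sessions: int = 4) -> bool:
    """Hypothetical over-reliance check over the trailing seven days.

    Flags heavy total use or frequent late-night sessions (midnight to 5 a.m.);
    either could trigger a gentle suggestion to contact friends, family,
    or professional support.
    """
    week_ago = now - timedelta(days=7)
    recent = [(start, mins) for start, mins in zip(session_starts, session_minutes)
              if start >= week_ago]
    total_minutes = sum(mins for _, mins in recent)
    late_sessions = sum(1 for start, _ in recent if start.hour < 5)
    return total_minutes > max_weekly_minutes or late_sessions > max_late_sessions

now = datetime(2024, 5, 10, 12, 0)
nightly = [datetime(2024, 5, day, 2, 0) for day in range(4, 10)]  # six 2 a.m. chats
print(should_nudge(nightly, [10] * 6, now))  # → True (late-night pattern)
```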

Personalized Support and Adaptive Learning

Well-designed systems employ a tiered support model. Basic interactions handle day-to-day emotional regulation, while more intensive modules activate during crises. Some systems attempt to recognize signs of dissociation or suicidal ideation through linguistic analysis and immediately connect users with human professionals. This adaptive response aims to match the level of care to the user's need while preserving the accessibility that makes AI support valuable.
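The tiered model amounts to a router that always checks for the highest-risk signals first. The sketch below uses placeholder phrase lists purely to show the control flow. Keyword matching is not a clinically valid way to detect suicidal ideation or dissociation, and the tier names and phrases are hypothetical.

```python
from enum import Enum

class Tier(Enum):
    SELF_HELP = 1   # everyday emotional-regulation exercises
    GUIDED = 2      # structured coping module
    HUMAN = 3       # immediate hand-off to a person or crisis service

# Placeholder phrases for illustration only. A real system would rely on a
# validated risk model and clinical protocols, not substring matching.
HIGH_RISK = ("want to die", "kill myself", "end it all")
ELEVATED = ("can't cope", "panic attack", "unreal", "detached")

def route(message: str) -> Tier:
    text = message.lower()
    # Highest-risk check runs first so it can never be masked by a lower tier.
    if any(phrase in text for phrase in HIGH_RISK):
        return Tier.HUMAN
    if any(phrase in text for phrase in ELEVATED):
        return Tier.GUIDED
    return Tier.SELF_HELP

for msg in ["A bit nervous about tomorrow's meeting",
            "Everything feels unreal today"]:
    print(msg, "->", route(msg).name)
```

Checking the high-risk tier before any other keeps escalation from being masked when a message also contains lower-tier phrases.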

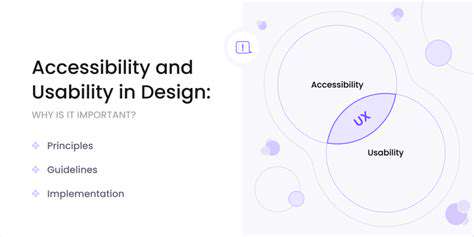

Accessibility and Inclusivity in AI Chatbots

Innovations in multimodal interfaces are breaking down accessibility barriers. Voice-based interfaces assist users with literacy challenges or visual impairments, while avatar-based systems support those who prefer visual communication. Cultural adaptation features aim to align the AI's communication style, metaphors, and even advice with the user's cultural background. Some platforms also offer communication style matching, adjusting whether the AI is more directive or reflective based on user preference.
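Communication style matching can be sketched as a single preference score nudged by user feedback. In this hypothetical example, -1 means fully reflective and +1 fully directive; the learning rate, the like/dislike feedback signal, and the reply templates are assumptions.

```python
def update_preference(pref: float, style_shown: str, liked: bool,
                      rate: float = 0.2) -> float:
    """Nudge a style preference in [-1, 1]: -1 = fully reflective, +1 = fully directive."""
    direction = 1.0 if style_shown == "directive" else -1.0
    signal = 1.0 if liked else -1.0
    return max(-1.0, min(1.0, pref + rate * direction * signal))

def reply(pref: float, event: str) -> str:
    """Choose between hypothetical directive and reflective reply templates."""
    if pref >= 0:
        return f"Try this: before the {event}, jot down three opening lines."
    return f"What feels hardest for you about the {event}?"

pref = 0.0
pref = update_preference(pref, "directive", liked=False)   # user disliked advice
pref = update_preference(pref, "reflective", liked=True)   # user liked a question
print(reply(pref, "party"))
```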

The Future of AI in Mental Health Support

We may be approaching an era of always-available mental health support, with AI systems acting as a first point of contact in emotional crises. Emerging technologies like affective computing promise systems that don't just respond to stated emotions but detect and address underlying mood states. The ultimate goal isn't to replace human care but to create a layered support ecosystem where AI handles routine maintenance, freeing human professionals for complex interventions.

The Future of AI and Social Anxiety: Accessibility and Integration

The Rise of AI-Driven Social Interactions

We're witnessing the emergence of hybrid social spaces where AI mediates human interactions. These systems analyze conversation patterns in real time, offering subtle prompts to socially anxious individuals, such as suggesting when to speak or providing conversation topics. While controversial, early studies suggest such augmented interactions can serve as training wheels, helping anxious individuals participate more comfortably in group settings before transitioning to unmediated interactions.
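A prompt suggesting when to speak could be driven by pauses in the other participants' speech. This sketch assumes an upstream voice-activity detector that emits one boolean per 100 ms frame; the 700 ms pause threshold and the `speaking_opportunities` helper are hypothetical.

```python
def speaking_opportunities(others_speaking: list[bool], frame_ms: int = 100,
                           min_pause_ms: int = 700) -> list[int]:
    """Times (ms from start) at which a 'you could speak now' prompt might appear.

    others_speaking: one voice-activity flag per frame for the other participants,
    assumed to come from an upstream voice-activity detector. A prompt fires once
    per pause, at the moment silence has lasted `min_pause_ms`.
    """
    frames_needed = max(1, min_pause_ms // frame_ms)
    prompts, silent_frames = [], 0
    for i, speaking in enumerate(others_speaking):
        silent_frames = 0 if speaking else silent_frames + 1
        if silent_frames == frames_needed:
            prompts.append((i + 1) * frame_ms)
    return prompts

# 1 s of talk, a 0.8 s lull, 0.5 s of talk, then a brief 0.3 s breath.
frames = [True] * 10 + [False] * 8 + [True] * 5 + [False] * 3
print(speaking_opportunities(frames))  # → [1700]
```

Firing only once per pause, and ignoring short breaths, keeps the prompts subtle rather than turning every gap into pressure to talk.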

The Impact on Employment and Social Status

AI is simultaneously disrupting and creating social hierarchies. New metrics of social capital are emerging based on digital interaction patterns and network analytics. Some organizations now use AI-powered social fitness assessments in hiring and promotions. This creates both challenges and opportunities for those with social anxiety - while traditional social demands may decrease, new forms of social performance emerge that may favor different skill sets.

AI's Role in Shaping Social Norms

Interestingly, AI systems trained on diverse datasets may actually help broaden social norms rather than constrain them. By exposing users to a wider range of communication styles and cultural norms than they'd typically encounter, these systems can reduce the pressure to conform to narrow social expectations. Some therapeutic applications specifically use this capability to help users develop more flexible, less perfectionistic social standards.

The Influence of AI on Self-Perception

New applications are flipping the script on social comparison. Instead of showing idealized versions of others, some systems generate compassionate mirrors - visualizations that help users see themselves more objectively and kindly. Others provide perspective filters that simulate how others might actually perceive them, often far more positively than their anxious self-assessments. These tools combat the distorted self-perception common in social anxiety.

Ethical Considerations and Future Research

The field urgently needs longitudinal studies tracking how prolonged AI-mediated social interaction affects human connection skills. Regulatory frameworks must balance innovation with protections against emotional manipulation. Most crucially, we must ensure these technologies serve human flourishing rather than corporate interests - prioritizing genuine wellbeing over engagement metrics or data monetization. The decisions made in this decade will shape the social landscape for generations.