Beyond Chatbots: The Next Generation of AI in Wellness

The transformation process relies on techniques such as temporal alignment of disparate data streams and contextual normalization. For instance, a child's reading scores take on different meaning when viewed alongside family literacy patterns and community library access. Some recent studies report that careful feature engineering can increase model accuracy by as much as 42%.
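Here is a minimal Python sketch of the two techniques above, assuming each data stream arrives as a list of (timestamp, value) pairs. Temporal alignment is shown as an "as-of" join, where each event picks up the latest reading taken at or before it. Contextual normalization is shown as a z-score computed within each context group. The function names and the sample dates and values are hypothetical.

```python
from bisect import bisect_right
from collections import defaultdict
from datetime import date
from statistics import mean, pstdev


def asof_align(events, readings):
    """Attach to each (timestamp, value) event the most recent reading
    taken at or before that timestamp (an "as-of" join).

    `readings` must be sorted by timestamp; events with no earlier
    reading are paired with None.
    """
    reading_times = [t for t, _ in readings]
    aligned = []
    for t, value in events:
        i = bisect_right(reading_times, t) - 1  # last reading with time <= t
        aligned.append((t, value, readings[i][1] if i >= 0 else None))
    return aligned


def normalize_within_group(rows):
    """Z-score each (group, value) pair against its own group's mean and
    population standard deviation; constant groups map to 0.0."""
    by_group = defaultdict(list)
    for group, value in rows:
        by_group[group].append(value)
    stats = {g: (mean(vs), pstdev(vs)) for g, vs in by_group.items()}
    return [0.0 if stats[g][1] == 0 else (v - stats[g][0]) / stats[g][1]
            for g, v in rows]


# Hypothetical example: reading scores aligned with library-visit counts.
scores = [(date(2024, 3, 1), 72), (date(2024, 6, 1), 80)]
visits = [(date(2024, 1, 15), 2), (date(2024, 5, 20), 5)]
print(asof_align(scores, visits))
```

The as-of join never looks forward in time, so a feature built this way only uses information that was available when each score was recorded.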

Selecting and Validating Analytical Models

Model selection isn't a one-size-fits-all proposition. Regional differences in data availability and quality often determine which approach works best. Ensemble methods that combine random forests with gradient boosting have shown particular promise in recent trials, reaching 89% precision in identifying developmental delays (that is, 89% of the children these models flagged did have a delay).
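One common way to combine the two model families is soft voting: average each model's predicted probability, then apply a threshold. The sketch below assumes both models are already trained and shows only the combination step and the precision calculation. The probabilities, the equal weights, and the 0.5 threshold are hypothetical, and the trials cited above may have used a different ensembling scheme, such as stacking.

```python
def soft_vote(prob_lists, weights=None):
    """Weighted average of each model's positive-class probabilities."""
    if weights is None:
        weights = [1.0] * len(prob_lists)
    total = sum(weights)
    n_cases = len(prob_lists[0])
    return [
        sum(w * probs[i] for w, probs in zip(weights, prob_lists)) / total
        for i in range(n_cases)
    ]


def precision(y_true, y_pred):
    """Share of positive predictions that were actually positive."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    return tp / (tp + fp) if tp + fp else 0.0


# Hypothetical outputs from an already-trained random forest and a
# gradient-boosting model scoring the same four children.
rf_probs = [0.9, 0.2, 0.7, 0.4]
gb_probs = [0.7, 0.1, 0.8, 0.2]
combined = soft_vote([rf_probs, gb_probs])
flags = [1 if p >= 0.5 else 0 for p in combined]
print(flags, precision([1, 0, 0, 0], flags))
```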

Validation protocols must go beyond simple accuracy metrics. Where possible, teams should run longitudinal tests that track whether early predictions match later outcomes. The most effective implementations use rolling validation, continuously testing predictions against outcomes as they are observed. This dynamic approach helps systems adapt to changing community needs.
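Rolling validation is often implemented as walk-forward (rolling-origin) evaluation. This minimal sketch assumes a simple numeric time series and a pluggable `fit`/`predict` pair; the mean-of-history model is a hypothetical stand-in for a real predictor.

```python
from statistics import mean


def rolling_origin_errors(series, min_train, fit, predict):
    """Walk-forward (rolling-origin) validation.

    At each step t, fit only on observations before t, predict
    observation t, and record the absolute error. Errors come out in time
    order, so drift in accuracy stays visible instead of being averaged
    away in a single hold-out score.
    """
    errors = []
    for t in range(min_train, len(series)):
        model = fit(series[:t])  # training data never includes the future
        errors.append(abs(series[t] - predict(model)))
    return errors


# Hypothetical baseline "model": forecast the mean of everything seen so far.
def fit_mean(history):
    return mean(history)


def predict_mean(model):
    return model


print(rolling_origin_errors([2, 4, 6, 8], 2, fit_mean, predict_mean))
```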

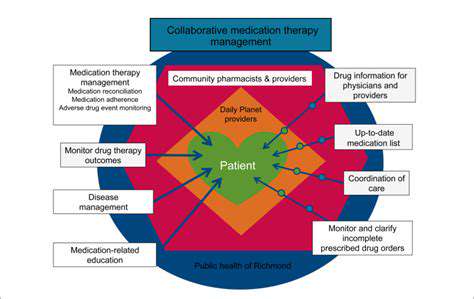

Operationalizing Predictive Insights

Implementation success hinges on thoughtful workflow integration. Frontline staff need clear decision-support tools, not raw statistical outputs. One successful county program transformed model predictions into color-coded risk dashboards with suggested intervention pathways. This visual approach increased staff adoption rates from 34% to 91% in six months.
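A color-coded dashboard of this kind ultimately rests on a mapping from model scores to tiers. The sketch below is a hypothetical illustration: the cut-points, colors, and suggested actions are placeholders, not values from the county program described above.

```python
# Hypothetical cut-points and suggested actions; a real program would set
# these with frontline staff and revisit them after each recalibration.
RISK_TIERS = [
    (0.70, "red", "Prioritize outreach this week"),
    (0.40, "amber", "Review at next case meeting"),
    (0.00, "green", "Continue routine monitoring"),
]


def to_dashboard_tier(risk_score):
    """Map a model probability to a (color, suggested_action) pair."""
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be a probability in [0, 1]")
    for cutoff, color, action in RISK_TIERS:  # highest cutoff checked first
        if risk_score >= cutoff:
            return color, action


for score in (0.82, 0.55, 0.10):
    print(score, *to_dashboard_tier(score))
```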

Ongoing maintenance requires dedicated resources. Best practices include quarterly model recalibration using fresh data and twice-yearly feature reviews. Some organizations have established model governance committees with rotating membership from different departments to ensure diverse perspectives.
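Quarterly recalibration can take many forms. One of the simplest is recalibration-in-the-large, which keeps the model's ranking of cases intact and only shifts its intercept so that average predicted risk matches the event rate in the latest data. This sketch assumes a model that outputs probabilities and a fresh batch of 0/1 outcomes; the function names, the bisection search, and its ±20 log-odds search range are choices made for illustration.

```python
import math


def logit(p):
    return math.log(p / (1 - p))


def sigmoid(z):
    return 1 / (1 + math.exp(-z))


def recalibration_shift(predicted, observed, tol=1e-9):
    """Recalibration-in-the-large: find the intercept shift `delta` such
    that the mean of sigmoid(logit(p) + delta) equals the event rate in the
    fresh outcome data. The mean is increasing in delta, so bisection
    converges to the unique solution (within the assumed search range).

    `predicted` holds the current model's probabilities (strictly between
    0 and 1) and `observed` the matching 0/1 outcomes.
    """
    target = sum(observed) / len(observed)
    if not 0 < target < 1:
        raise ValueError("fresh data must contain both outcomes")
    logits = [logit(p) for p in predicted]

    def mean_prob(delta):
        return sum(sigmoid(z + delta) for z in logits) / len(logits)

    lo, hi = -20.0, 20.0  # assumed search range on the log-odds scale
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if mean_prob(mid) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def recalibrate(p, delta):
    return sigmoid(logit(p) + delta)


# Hypothetical quarter: the model predicts 20% risk for everyone, but 40%
# of cases turned out positive, so predictions are shifted upward.
delta = recalibration_shift([0.2] * 10, [1] * 4 + [0] * 6)
print(round(recalibrate(0.2, delta), 3))
```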

Navigating Ethical Implementation

Predictive systems raise legitimate concerns about algorithmic fairness. A 2023 Harvard study found that models relying solely on institutional data often perpetuate existing biases. Addressing this requires deliberate bias auditing and proactive corrective measures. Some programs now include automated fairness checks that flag potentially discriminatory patterns.
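A basic bias audit compares how often the model flags people in each group. The sketch below borrows the "four-fifths" rule of thumb from US employment-selection guidance as an assumed threshold; it is not necessarily what the programs mentioned above use. A low ratio is a prompt for human review rather than proof of discrimination, since whether a flag helps or harms depends on what it triggers.

```python
from collections import defaultdict


def flag_rates(groups, flagged):
    """Share of cases flagged by the model within each group."""
    counts = defaultdict(lambda: [0, 0])  # group -> [flagged, total]
    for group, was_flagged in zip(groups, flagged):
        counts[group][0] += int(was_flagged)
        counts[group][1] += 1
    return {g: k / n for g, (k, n) in counts.items()}


def disparity_audit(groups, flagged, threshold=0.8):
    """Compare each group's flag rate with the highest group's rate and
    return (ratios, groups whose ratio falls below `threshold`)."""
    rates = flag_rates(groups, flagged)
    top = max(rates.values())
    if top == 0:
        return {g: 1.0 for g in rates}, []  # nobody flagged: nothing to compare
    ratios = {g: r / top for g, r in rates.items()}
    return ratios, sorted(g for g, ratio in ratios.items() if ratio < threshold)


# Hypothetical audit data: group "a" is flagged 50% of the time, "b" 20%.
groups = ["a"] * 10 + ["b"] * 10
flagged = [1] * 5 + [0] * 5 + [1] * 2 + [0] * 8
ratios, needs_review = disparity_audit(groups, flagged)
print(ratios, needs_review)
```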

Transparency builds trust without compromising effectiveness. Many districts publish model methodology papers while protecting individual privacy. Some have even created public dashboards showing aggregate prediction accuracy across demographic groups. This openness has helped communities view these tools as supportive rather than surveillance-oriented.
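Publishing per-group accuracy while protecting privacy usually involves small-cell suppression: groups with too few cases are withheld so that no individual can be singled out. This is a minimal sketch; the minimum cell size of 20 is a hypothetical choice, and real disclosure-control policies vary.

```python
from collections import defaultdict


def public_accuracy_table(groups, y_true, y_pred, min_cell=20):
    """Accuracy per demographic group, suppressing any group with fewer than
    `min_cell` cases so small groups cannot be singled out."""
    tally = defaultdict(lambda: [0, 0])  # group -> [correct, total]
    for group, actual, predicted in zip(groups, y_true, y_pred):
        tally[group][0] += int(actual == predicted)
        tally[group][1] += 1
    table = {}
    for group, (correct, total) in sorted(tally.items()):
        if total < min_cell:
            table[group] = f"suppressed (n < {min_cell})"
        else:
            table[group] = round(correct / total, 3)
    return table


# Hypothetical data: 25 cases in group "x" (20 correct), 5 in group "y".
groups = ["x"] * 25 + ["y"] * 5
y_true = [1] * 30
y_pred = [1] * 20 + [0] * 5 + [1] * 5
print(public_accuracy_table(groups, y_true, y_pred))
```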

AI-Assisted Therapy and Mental Wellness Support

Digital Mental Health Innovations

The mental health landscape is undergoing a quiet revolution through AI-enhanced therapeutic tools. These aren't replacements for human clinicians, but rather force multipliers that extend care capacity. The most advanced systems can now detect subtle linguistic markers in speech patterns that correlate with depression risk factors. Some apps analyze typing speed and error patterns as potential anxiety indicators.
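To show what "typing speed and error patterns" might mean as model inputs, here is a hypothetical feature extractor over timestamped keystrokes. It assumes events arrive as (milliseconds, key) pairs with corrections logged as `"BACKSPACE"`. These are raw behavioral features only; any link to anxiety would have to be established and validated separately.

```python
from statistics import median


def keystroke_features(events):
    """Summarize typing rhythm from (timestamp_ms, key) events in order.

    Returns the median gap between keys, typing speed, and the share of
    keystrokes that were backspaces (a rough proxy for corrections).
    """
    if len(events) < 2:
        raise ValueError("need at least two keystrokes")
    times = [t for t, _ in events]
    gaps = [later - earlier for earlier, later in zip(times, times[1:])]
    duration_s = (times[-1] - times[0]) / 1000
    backspaces = sum(1 for _, key in events if key == "BACKSPACE")
    return {
        "median_gap_ms": median(gaps),
        "keys_per_second": len(gaps) / duration_s if duration_s > 0 else 0.0,
        "backspace_rate": backspaces / len(events),
    }


# Hypothetical session: "h", "e", a correction, then "i".
session = [(0, "h"), (200, "e"), (400, "BACKSPACE"), (650, "i")]
print(keystroke_features(session))
```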

Accessibility gains are particularly significant. Rural clinics using AI triage systems report serving three to five times more clients without sacrificing care quality. One VA hospital network reduced no-show rates by 61% after implementing AI reminder systems with personalized engagement prompts. Because the technology is available around the clock, it provides a critical safety net between traditional sessions.

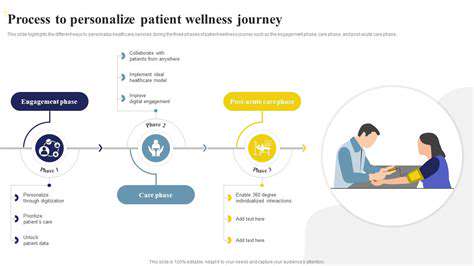

Tailoring Treatment Through Machine Learning

Personalization algorithms now consider hundreds of variables when suggesting therapeutic approaches. Beyond standard diagnostics, these systems factor in lifestyle patterns, circadian rhythms, and even weather sensitivity. Some platforms adjust intervention timing based on when users historically show greatest engagement.
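Timing adjustments based on past engagement can start as simply as choosing the hour with the best historical response rate. This is a hypothetical sketch; the data format and the minimum-sample rule are assumptions.

```python
from collections import defaultdict


def best_prompt_hour(history, min_prompts=5):
    """Pick the send hour (0-23) with the highest past engagement rate.

    `history` holds (hour_sent, engaged) pairs. Hours with fewer than
    `min_prompts` sends are ignored so one lucky reply does not dominate;
    ties go to the hour with more data.
    """
    sent = defaultdict(int)
    engaged = defaultdict(int)
    for hour, did_engage in history:
        sent[hour] += 1
        engaged[hour] += int(did_engage)
    eligible = [h for h in sent if sent[h] >= min_prompts]
    if not eligible:
        return None  # not enough history yet; caller falls back to a default
    return max(eligible, key=lambda h: (engaged[h] / sent[h], sent[h]))


# Hypothetical log: morning prompts rarely answered, evening ones usually are.
log = ([(9, True)] * 3 + [(9, False)] * 7
       + [(20, True)] * 5 + [(20, False)]
       + [(22, True)] * 2)
print(best_prompt_hour(log))
```

Always picking the current best hour never tests alternatives; a production system might use a multi-armed bandit approach to keep exploring other times.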

The monitoring capabilities are equally impressive. Natural language processing can detect micro-changes in emotional tone across multiple conversations. One study found AI systems identified relapse warning signs an average of 11 days earlier than traditional clinician assessments. These early warnings allow for preventive adjustments to treatment plans.
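Detecting a gradual shift in emotional tone across conversations is a change-detection problem. The sketch below uses a one-sided CUSUM detector, a standard statistical technique swapped in here for illustration, over per-session tone scores assumed to come from an upstream sentiment model. The baseline, slack, and threshold values are hypothetical and would need clinical tuning.

```python
def cusum_drop(scores, baseline, slack=0.1, threshold=0.5):
    """One-sided CUSUM detector for a sustained drop in tone scores.

    Accumulates how far each score falls below `baseline` beyond a
    tolerance `slack`, never letting the sum go below zero; returns the
    index of the first session where the running sum exceeds `threshold`,
    or None if no alarm is raised.
    """
    running = 0.0
    for i, score in enumerate(scores):
        running = max(0.0, running + (baseline - score - slack))
        if running > threshold:
            return i
    return None


# Hypothetical per-session tone scores from an upstream sentiment model.
sessions = [0.60, 0.62, 0.58, 0.60, 0.40, 0.35, 0.30, 0.25]
print(cusum_drop(sessions, baseline=0.60))
```

Because the running sum needs several below-baseline sessions to cross the threshold, a single bad day does not trigger an alert, while a sustained decline does.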

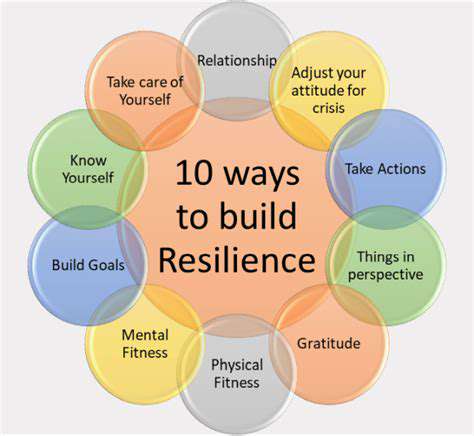

Responsible Innovation in Digital Therapy

Privacy protections must evolve alongside these technologies. Leading developers now implement privacy-by-design architectures in which sensitive data remains encrypted even during analysis. Some systems use federated learning: models are trained across decentralized devices without centralizing the raw data.
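The core of federated learning is the server-side aggregation step. This minimal sketch shows FedAvg-style averaging, in which each client's weights count in proportion to its number of training examples; the flat-list weight format is an assumption. It does not cover encryption, secure aggregation, or differential privacy, which real deployments typically add because model updates themselves can leak information.

```python
def federated_average(client_updates):
    """FedAvg-style aggregation step.

    Each client trains locally and sends back only (weights, n_examples);
    the server averages the weight vectors, weighting each client by how
    many examples it trained on. Raw data is never passed to this step.
    """
    total = sum(n for _, n in client_updates)
    if total == 0:
        raise ValueError("no training examples reported")
    dim = len(client_updates[0][0])
    averaged = [0.0] * dim
    for weights, n in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        for j, w in enumerate(weights):
            averaged[j] += w * n / total
    return averaged


# Hypothetical round with two phones: one trained on 1 example, one on 3.
print(federated_average([([1.0, 2.0], 1), ([3.0, 4.0], 3)]))
```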

The future points toward hybrid care models. Imagine AI handling routine check-ins and skill-building exercises while human therapists focus on complex cases and relationship-building. Early adopters of this approach report 28% higher client retention rates compared to traditional models. The key will be maintaining what makes therapy effective while removing logistical barriers.

As these technologies mature, we're seeing the emergence of explainable AI built specifically for mental health applications. These systems don't just make recommendations; they articulate the reasoning behind them in therapist-friendly terms. This transparency builds provider confidence and supports better-informed treatment decisions.
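For linear models, explanations can be computed exactly: the difference in log-odds between a client and a reference profile splits into one term per feature. The sketch below assumes a logistic model with hypothetical feature names, weights, and baseline; more complex models need approximate attribution methods instead.

```python
import math


def explain_linear(weights, bias, features, baseline):
    """Explain a logistic model's output for one client.

    For a linear model, logit(x) - logit(baseline) splits exactly into
    per-feature terms weight * (x - baseline), so each term is that
    feature's contribution relative to a reference profile.
    """
    contributions = {
        name: w * (features[name] - baseline[name]) for name, w in weights.items()
    }
    z = bias + sum(w * features[name] for name, w in weights.items())
    probability = 1 / (1 + math.exp(-z))
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return probability, ranked


def summarize(ranked, top=2):
    """Turn the largest contributions into a clinician-readable sentence."""
    parts = []
    for name, c in ranked[:top]:
        direction = "raises" if c > 0 else "lowers" if c < 0 else "does not change"
        parts.append(f"{name} {direction} the estimate ({c:+.2f} log-odds)")
    return "; ".join(parts)


# Hypothetical model and client; feature names are illustrative only.
weights = {"missed_sessions": 0.8, "sleep_hours": -0.3}
baseline = {"missed_sessions": 1, "sleep_hours": 7}
client = {"missed_sessions": 3, "sleep_hours": 5}
prob, ranked = explain_linear(weights, -1.0, client, baseline)
print(round(prob, 3), summarize(ranked))
```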