AI for Substance Abuse Prevention: Personalized Risk Assessment

Leveraging Data for Personalized Risk Assessment

Understanding the Fundamentals of Personalized Recommendations

Personalized recommendations are a cornerstone of modern e-commerce and digital services, aiming to enhance user experience and drive engagement. This approach uses data analysis to tailor content, products, or services to individual preferences, needs, and behaviors. By analyzing user data, businesses can predict what a user is likely to want and offer relevant suggestions, which can significantly improve conversion rates and customer satisfaction. The key lies not just in collecting data but in processing and interpreting it effectively.

An important part of building personalized recommendations is understanding the types of data available. These include explicit feedback, such as ratings and reviews, and implicit feedback, such as browsing history and purchase patterns. Analyzing both kinds of signal gives a fuller picture of user preferences and supports more refined, effective recommendations. Taking context, time, and location into account can make suggestions even more relevant; for example, a recent interaction is usually a better guide to current interests than one from a year ago. This analysis is the foundation for tuning the recommendation system toward its goals.
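As a rough illustration of combining explicit and implicit signals with a time factor, the sketch below turns ratings and interaction events into a single per-item preference score. The event weights, the 30-day half-life, and the choice to centre ratings on 3 are all hypothetical tuning choices, not values from any particular system.

```python
from collections import defaultdict

# Hypothetical weights: how strongly each implicit event suggests interest.
EVENT_WEIGHTS = {"view": 1.0, "add_to_cart": 3.0, "purchase": 5.0}
HALF_LIFE_DAYS = 30.0  # assumption: an interaction loses half its weight every 30 days


def decay(age_days: float) -> float:
    """Exponential time decay: the weight halves every HALF_LIFE_DAYS."""
    return 0.5 ** (age_days / HALF_LIFE_DAYS)


def preference_scores(explicit, implicit):
    """Combine explicit ratings and time-decayed implicit events per item.

    explicit: dict mapping item -> rating on a 1-5 scale
    implicit: list of (item, event_type, age_days) tuples
    """
    scores = defaultdict(float)
    for item, event, age in implicit:
        # Unknown event types contribute nothing.
        scores[item] += EVENT_WEIGHTS.get(event, 0.0) * decay(age)
    for item, rating in explicit.items():
        # Centre ratings on 3 so that a low rating pulls the score down.
        scores[item] += rating - 3
    return dict(scores)


scores = preference_scores({"book": 5}, [("book", "view", 0), ("lamp", "purchase", 30)])
```

Here the fresh view of "book" plus its 5-star rating scores 3.0, while the month-old purchase of "lamp" is discounted to 2.5. An exponential decay is only one way to encode recency; sliding windows are a common alternative.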

Implementing Effective Strategies for Personalized Recommendations

Several strategies can be used to build a personalized recommendation system. Collaborative filtering analyzes the preferences of similar users to predict what a given user might like. Content-based filtering, by contrast, focuses on the attributes of items and recommends items similar to those the user has liked before. Hybrid approaches combine the two and often perform better than either alone, because each compensates for the other's weaknesses: content-based filtering, for example, can still score a new item that no user has rated yet.
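The three strategies can be sketched in a few lines. This is a minimal illustration, assuming ratings on a 1-5 scale stored as nested dicts and item attributes stored as sparse feature dicts; the function names and the blending weight `alpha` are hypothetical, and production systems typically use matrix factorization or learned models instead of this neighbourhood approach.

```python
import math

MAX_RATING = 5.0  # assumption: ratings use a 1-5 scale


def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(a[k] * b[k] for k in set(a) & set(b))
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def collaborative_score(user, item, ratings):
    """User-based collaborative filtering: other users' ratings for `item`,
    weighted by how similar their rating history is to `user`'s."""
    num = den = 0.0
    for other, their in ratings.items():
        if other == user or item not in their:
            continue
        sim = cosine(ratings[user], their)
        num += sim * their[item]
        den += abs(sim)
    return num / den if den else 0.0


def content_score(user, item, ratings, features):
    """Content-based filtering: compare the item's attributes with a profile
    built from the attributes of items the user rated, weighted by rating."""
    profile = {}
    for rated, r in ratings[user].items():
        for f, v in features[rated].items():
            profile[f] = profile.get(f, 0.0) + r * v
    return cosine(profile, features[item])


def hybrid_score(user, item, ratings, features, alpha=0.5):
    """Weighted blend; the collaborative score is rescaled to roughly [0, 1]
    so both parts are on comparable scales."""
    cf = collaborative_score(user, item, ratings) / MAX_RATING
    cb = content_score(user, item, ratings, features)
    return alpha * cf + (1 - alpha) * cb


ratings = {
    "ana": {"a": 5, "b": 3},
    "ben": {"a": 4, "b": 3, "c": 5},
    "cy": {"a": 1, "c": 2},
}
features = {"a": {"x": 1.0}, "b": {"y": 1.0}, "c": {"x": 1.0}}
```

For "ana" and item "c", the collaborative score leans toward ben's rating of 5 because ben's history resembles ana's more than cy's does, and the content score is high because "c" shares attribute `x` with "a", which ana rated highly.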

Beyond these core strategies, businesses can use machine learning models to refine their recommendations. Trained on large datasets, these models can identify complex patterns and relationships in user data, enabling more sophisticated predictions that can improve user satisfaction and engagement. Continuously monitoring and evaluating the system's performance is also essential, both to optimize it and to keep it relevant as user preferences and trends evolve.
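One common way to monitor a recommender offline is to compare its top-k suggestions with the items each user actually engaged with. The sketch below computes precision@k and recall@k and averages them across users; the function names are illustrative and not taken from any specific library.

```python
def precision_at_k(recommended, relevant, k):
    """Fraction of the top-k recommendations the user actually engaged with."""
    if k <= 0:
        raise ValueError("k must be positive")
    hits = sum(1 for item in recommended[:k] if item in relevant)
    return hits / k


def recall_at_k(recommended, relevant, k):
    """Fraction of the user's relevant items that appear in the top k."""
    if not relevant:
        return 0.0
    hits = sum(1 for item in recommended[:k] if item in relevant)
    return hits / len(relevant)


def mean_metric(metric, runs, k):
    """Average a metric across users; runs is a list of (recommended, relevant) pairs."""
    if not runs:
        return 0.0
    return sum(metric(rec, rel, k) for rec, rel in runs) / len(runs)


runs = [
    (["a", "b", "c"], {"a", "c", "d"}),  # user 1: ranked list, items engaged with
    (["x", "y", "z"], {"y"}),            # user 2
]
```

Tracking these averages over time (for example, per weekly data snapshot) is a simple way to notice when recommendations drift away from what users actually want.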

A crucial element in the implementation of personalized recommendations is ensuring data privacy and security. Users need to trust that their data is handled responsibly and ethically. Transparency about data collection and usage practices is paramount to building trust and maintaining a positive user experience. Robust security measures are also essential to protect sensitive user data from unauthorized access or misuse.

Tailoring Interventions Based on Predicted Risk

Predictive Modeling for Early Identification

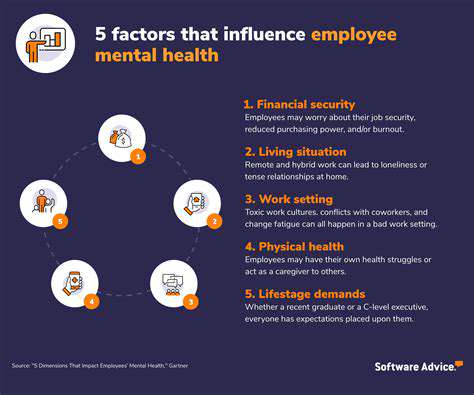

AI algorithms that analyze large datasets of risk factors, including demographic information, lifestyle choices, and social determinants of health, can help identify individuals who may be at elevated risk for substance abuse. This makes earlier intervention possible, when it is more likely to be effective. Predictive modeling is not about labeling individuals; it is about identifying potential vulnerabilities and offering support proactively, before substance use escalates.
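A logistic model is one of the simplest ways to turn risk factors into an estimated probability. The sketch below is illustrative only: the feature names, coefficients, and outreach threshold are hypothetical placeholders, not values from any study, and a real model would need to be fitted and validated on appropriate, consented data. Note that the output is used to offer support, not to assign a label.

```python
import math

# Hypothetical, illustrative coefficients on the log-odds scale.
# NOT derived from any study; a real model must be fitted and validated.
INTERCEPT = -3.0
WEIGHTS = {
    "family_history": 0.9,
    "peer_substance_use": 1.1,
    "recent_major_stressor": 0.6,
    "school_connectedness": -0.8,  # a protective factor lowers the log-odds
}


def risk_probability(features):
    """Logistic model: p = 1 / (1 + exp(-(b0 + sum of w_i * x_i))).
    Missing features are treated as 0."""
    z = INTERCEPT + sum(w * features.get(name, 0.0) for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def flag_for_outreach(features, threshold=0.3):
    """True if the estimated probability meets a hypothetical outreach threshold."""
    return risk_probability(features) >= threshold


person = {"family_history": 1, "peer_substance_use": 1, "recent_major_stressor": 1}
```

With these placeholder weights, `person` has an estimated probability of about 0.40 and would be offered outreach; adding `"school_connectedness": 1` lowers it to about 0.23, below the threshold. Choosing the threshold is a policy decision that trades missed cases against unnecessary contacts.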

Identifying at-risk individuals early makes it possible to offer personalized prevention programs. These programs can be tailored to each person's specific needs and circumstances, increasing the likelihood of reducing substance abuse and promoting overall well-being.

Personalized Intervention Strategies

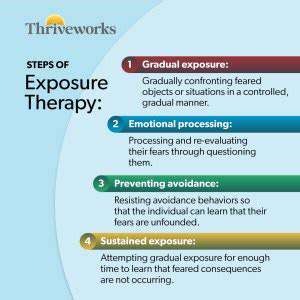

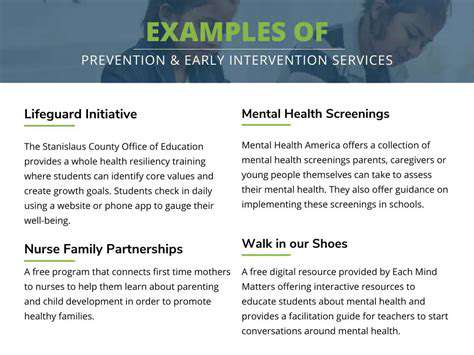

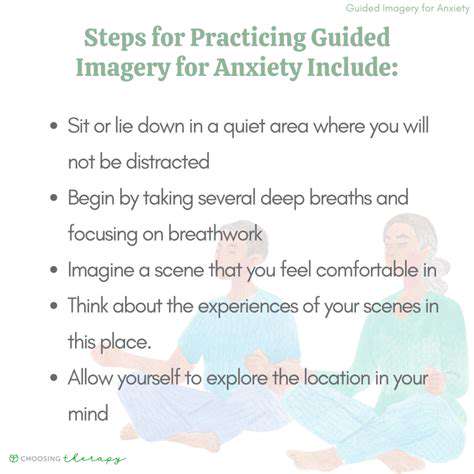

Once individuals are identified as potentially at risk, AI can help tailor intervention strategies to their specific needs. This might involve recommending specific support groups, connecting them with mental health resources, or providing educational materials relevant to their situation. The goal is to replace one-size-fits-all programs with targeted support that addresses each person's particular vulnerabilities.
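A simple way to connect a risk model's output to concrete support is a catalogue that maps each contributing factor to relevant resources, ordered by how much that factor contributed. The catalogue entries and function below are hypothetical; in practice the options would be curated by clinicians and prevention specialists.

```python
# Hypothetical catalogue mapping contributing factors to support options.
RESOURCES = {
    "peer_substance_use": ["peer support group", "refusal-skills module"],
    "recent_major_stressor": ["counselling referral", "stress-management course"],
    "family_history": ["family education sessions"],
}
DEFAULT = ["general prevention education materials"]


def recommend_supports(factor_scores, max_items=3):
    """Build a short support plan.

    factor_scores: dict factor -> contribution to the person's risk estimate.
    Only factors that increase risk (score > 0) are used, largest first;
    resources are de-duplicated and the plan is capped at max_items.
    """
    ordered = sorted(
        (f for f, s in factor_scores.items() if s > 0),
        key=lambda f: factor_scores[f],
        reverse=True,
    )
    seen, plan = set(), []
    for factor in ordered:
        for resource in RESOURCES.get(factor, []):
            if resource not in seen:
                seen.add(resource)
                plan.append(resource)
    return plan[:max_items] or list(DEFAULT)


plan = recommend_supports(
    {"peer_substance_use": 1.1, "recent_major_stressor": 0.6, "school_connectedness": -0.8}
)
```

Here the plan starts with the peer-related resources, because that factor contributed most, and then adds a counselling referral; protective factors with negative contributions are ignored.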

Accessibility and Scalability

AI-powered interventions can be delivered through various channels, including mobile applications, online platforms, and integrated healthcare systems, which makes convenient, timely support available to a wider population. This scalability is crucial: it extends the reach of preventive measures to a large number of at-risk individuals and helps keep substance abuse problems from becoming widespread.

Monitoring and Evaluation of Progress

AI systems can continuously monitor the progress of individuals participating in intervention programs. This allows for adjustments to be made in real-time, ensuring that interventions remain effective and responsive to individual needs. Data analysis can highlight areas where interventions are not yielding desired results, facilitating necessary modifications and improvements.
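As a minimal sketch of flagging interventions that are not producing the desired results, the function below compares a participant's most recent check-ins with their starting baseline. It assumes a hypothetical check-in score where higher means more difficulty; the window size and improvement threshold are illustrative parameters, and a flag is meant to prompt human review, not an automatic change.

```python
from statistics import mean


def needs_review(scores, window=3, min_improvement=0.1):
    """Flag a participant whose recent check-ins have not improved enough.

    scores: chronological check-in scores, where HIGHER means more difficulty
    (a hypothetical scale). The baseline is the mean of the first `window`
    scores and "recent" is the mean of the last `window`. Returns True when
    the recent mean is not at least `min_improvement` (as a fraction) below
    the baseline.
    """
    if len(scores) < 2 * window:
        return False  # not enough data yet to judge a trend
    baseline = mean(scores[:window])
    recent = mean(scores[-window:])
    if baseline == 0:
        return recent > 0
    return (baseline - recent) / baseline < min_improvement


improving = [8, 8, 8, 6, 5, 4]
stalled = [6, 6, 6, 6, 7, 6]
```

The improving participant drops from a baseline of 8 to a recent mean of 5 and is not flagged; the stalled participant shows no improvement and would be flagged for a review of their intervention plan.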

Integration with Existing Healthcare Systems

Integrating AI-driven risk prediction and intervention tools into existing healthcare systems can streamline how individuals at risk are identified and supported. Building these tools into established clinical workflows, rather than running them as separate systems, reduces friction, helps people receive timely and appropriate care, and improves the likelihood of adherence and positive outcomes.
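Many healthcare systems exchange data using the HL7 FHIR standard, which defines a `RiskAssessment` resource. Assuming the target system accepts FHIR R4 JSON (an assumption; the article does not name a standard), a model output could be packaged as shown below. The patient ID, outcome text, and rationale are hypothetical, and sending the resource to a FHIR server, along with authentication, is out of scope for this sketch.

```python
import json
from datetime import datetime, timezone


def to_fhir_risk_assessment(patient_id, probability, rationale):
    """Wrap a model output as a FHIR R4 RiskAssessment resource (a plain dict).

    Status is "preliminary" because the score should be reviewed by a
    clinician before it informs care.
    """
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    return {
        "resourceType": "RiskAssessment",
        "status": "preliminary",
        "subject": {"reference": f"Patient/{patient_id}"},
        "occurrenceDateTime": datetime.now(timezone.utc).isoformat(),
        "prediction": [
            {
                # Hypothetical outcome label; a real deployment would use a coded concept.
                "outcome": {"text": "Substance use problem"},
                "probabilityDecimal": round(probability, 3),
                "rationale": rationale,
            }
        ],
    }


resource = to_fhir_risk_assessment(
    "example-123", 0.4013, "Peer substance use and a recent major stressor"
)
payload = json.dumps(resource)  # JSON body suitable for a FHIR API request
```

Marking the assessment as preliminary keeps a clinician in the loop, which fits the aim of supporting rather than replacing professional judgment.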

Ethical Considerations and Data Privacy

The use of AI for substance abuse prevention raises important ethical considerations, particularly around data privacy and the potential for algorithmic bias. Robust safeguards must protect the privacy and confidentiality of individuals' data, and regular audits and evaluations are essential to identify and mitigate biases in the AI models. Addressing these issues is critical to ensuring that these technologies are applied responsibly and equitably.

Ethical Considerations and Future Directions

Data Privacy and Security

Protecting user data is paramount in any application or service, and especially so for sensitive information such as health and substance-use records. Robust encryption and secure storage protocols are crucial to prevent unauthorized access and breaches. Thorough security audits and regular vulnerability assessments help identify and mitigate potential risks. Transparent privacy policies that clearly explain how user data is collected, used, and protected are also vital for building trust and complying with relevant regulations.
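Alongside encryption, which should rely on a vetted cryptography library, a common complementary safeguard is pseudonymization: replacing direct identifiers with keyed hashes before data reaches analytics or model training. The sketch below uses HMAC-SHA256 from Python's standard library; the record layout is hypothetical, and in practice the key would be kept in a secrets manager, separate from the data it protects.

```python
import hashlib
import hmac
import secrets


def pseudonymize(identifier: str, key: bytes) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256, hex-encoded).

    The same identifier and key always produce the same token, so records
    can still be linked, but without the key an attacker cannot recompute
    tokens from guessed identifiers.
    """
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


# Illustration only: a real key would come from a secrets manager.
key = secrets.token_bytes(32)
record = {"patient_id": "MRN-00042", "risk": 0.40}  # hypothetical record
safe_record = {**record, "patient_id": pseudonymize(record["patient_id"], key)}
```

Pseudonymized data can often still be re-identified when combined with other attributes, so it reduces risk rather than eliminating it.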

Transparency and Accountability

Users have a right to understand how their data is being used and who has access to it. Clear and concise explanations of data usage practices should be provided in easily accessible formats. Open communication channels for addressing concerns and feedback are essential, allowing users to feel empowered and confident in the ethical handling of their information. Furthermore, mechanisms for holding developers and organizations accountable for data breaches and misuse are necessary to foster trust and responsible practices.

Bias Mitigation in Algorithms

Algorithmic bias can lead to unfair or discriminatory outcomes, so it is crucial to identify and mitigate bias in the design and training of algorithms. This requires scrutinizing the training data (for example, whether some groups are underrepresented) and checking whether the model's outputs differ systematically across groups. Regular audits and evaluations are necessary to detect and correct systemic biases.
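A basic audit compares how a model behaves across groups. The sketch below computes, per group, the selection rate (share of people flagged) and the false-negative rate (share of people who went on to have the outcome but were not flagged), then reports the largest gap between groups. The record format and group labels are hypothetical, and which metrics matter most is a policy decision.

```python
from collections import defaultdict


def audit_by_group(records):
    """Per-group selection rate and false-negative rate.

    records: iterable of (group, flagged: bool, actual_outcome: bool)
    """
    stats = defaultdict(lambda: {"n": 0, "flagged": 0, "positives": 0, "missed": 0})
    for group, flagged, actual in records:
        s = stats[group]
        s["n"] += 1
        s["flagged"] += int(flagged)
        if actual:
            s["positives"] += 1
            s["missed"] += int(not flagged)
    report = {}
    for group, s in stats.items():
        report[group] = {
            "selection_rate": s["flagged"] / s["n"],
            # Undefined (None) when a group has no positive outcomes.
            "false_negative_rate": s["missed"] / s["positives"] if s["positives"] else None,
        }
    return report


def max_gap(report, metric):
    """Largest difference in a metric between any two groups."""
    values = [r[metric] for r in report.values() if r[metric] is not None]
    return max(values) - min(values) if values else 0.0


records = [
    ("A", True, True), ("A", False, False),
    ("B", False, True), ("B", True, True),
]
report = audit_by_group(records)
```

In this toy example both groups are flagged at the same rate, yet half of group B's true cases are missed versus none in group A, which shows why an audit should look at more than one metric.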

Algorithmic Explainability and Interpretability

Understanding how algorithms arrive at their decisions is essential for building trust and ensuring accountability. Transparent and explainable algorithms allow users to understand the rationale behind the results, fostering greater confidence and reducing concerns about potential unfair or discriminatory outcomes. This includes developing methods for interpreting the complex internal workings of algorithms in a manner that is accessible to users and stakeholders.
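For linear models such as logistic regression, an explanation can be exact: each feature's contribution to the log-odds is its coefficient times its value. The sketch below ranks those contributions so a user or clinician can see what drove a score. The coefficients are hypothetical placeholders; for more complex models, approximate attribution methods such as SHAP are commonly used instead.

```python
import math

# Hypothetical coefficients for a logistic risk model; illustrative only.
INTERCEPT = -3.0
WEIGHTS = {
    "family_history": 0.9,
    "peer_substance_use": 1.1,
    "recent_major_stressor": 0.6,
    "school_connectedness": -0.8,
}


def explain(features):
    """Return the estimated probability and each feature's additive
    contribution to the log-odds, ranked by absolute size."""
    contributions = {name: w * features.get(name, 0.0) for name, w in WEIGHTS.items()}
    z = INTERCEPT + sum(contributions.values())
    prob = 1.0 / (1.0 + math.exp(-z))
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return prob, ranked


prob, ranked = explain({"peer_substance_use": 1, "school_connectedness": 1})
```

Here peer substance use is the largest factor raising the estimate and school connectedness the largest factor lowering it, which is the kind of plain-language rationale the paragraph above calls for.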

Impact on Society and Individuals

The development and deployment of AI systems have significant social and individual implications. Careful attention must be paid to potential societal impacts, such as job displacement and the widening of existing inequalities. Open dialogue and collaboration among developers, policymakers, and the public are crucial for addressing these challenges proactively and ensuring that AI benefits all members of society. Ongoing monitoring and evaluation of the long-term impacts of AI systems are also critical to responsible development and deployment.

Responsible Innovation and Development

Ethical frameworks and guidelines are essential for responsible innovation in the AI field. Clear ethical principles and best practices can help mitigate potential risks and ensure that AI systems are developed and used in line with societal values and goals. Collaboration among researchers, developers, and ethicists is vital for fostering a culture of ethical reflection throughout the entire AI lifecycle.

Hot Recommendations

- AI Driven Personalized Sleep Training for Chronic Insomnia

- AI Driven Personalization for Sustainable Stress Management

- Your Personalized Guide to Overcoming Limiting Beliefs

- Understanding Gender Dysphoria and Mental Health Support

- The Power of Advocacy: Mental Health Initiatives Reshaping Society

- Building a Personalized Self Compassion Practice for Self Worth

- The Ethics of AI in Mental Wellness: What You Need to Know

- AI Driven Insights into Your Unique Stress Triggers for Personalized Management

- Beyond Awareness: Actionable Mental Health Initiatives for Lasting Impact

- Creating a Personalized Sleep Hygiene Plan for Shift Workers