AI and Empathy: Can Technology Truly Support Mental Health?

Beyond the Basics: AI in Personalized Therapy

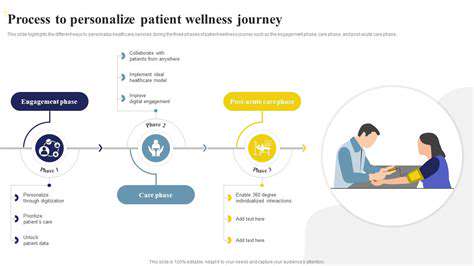

Artificial intelligence (AI) is advancing quickly, and its potential uses in therapy go well beyond simple chatbot conversations. By analyzing large amounts of data, AI can help tailor treatment plans to individual needs. That degree of personalization is hard to achieve by hand. A data-driven approach can take into account a patient's history, current circumstances, and preferences when shaping a therapeutic strategy. Machine learning models can also identify patterns and flag likely challenges, so therapists can intervene earlier and potentially improve outcomes. Used well, this kind of personalization could make therapy both more effective and more satisfying for the patient.

AI-powered tools can also support therapists directly. They can handle tasks such as scheduling appointments, managing patient records, and even drafting preliminary assessments. This lets therapists spend more time on the therapeutic relationship and less on administration. The resulting efficiency can improve patient care and make better use of limited therapeutic resources. These tools can also help therapists track progress more closely and adjust interventions based on real-time data.

AI-Augmented Therapeutic Techniques

Beyond personalization, AI can augment existing therapeutic techniques. AI-powered platforms can guide patients through cognitive behavioral therapy (CBT) exercises and give customized feedback in real time. This feedback loop can help patients recognize and challenge negative thought patterns more effectively, which may lead to faster progress and better outcomes.

AI can also help develop new therapeutic techniques. For example, AI-driven simulations could let patients practice coping strategies in realistic scenarios within a safe, controlled setting. This could be especially valuable for people dealing with anxiety or trauma. They could confront difficult situations gradually and with support, rehearsing for the real-world situations they find challenging.

Imagine a future where AI tools offer ongoing support and encouragement, with personalized feedback and prompts that help patients stay on track during difficult periods. This could be particularly important for people living with chronic conditions or long-term mental health challenges. Consistent support of this kind can foster a sense of empowerment and accountability. It also extends the continuity of care beyond traditional therapy sessions.

AI-powered tools can also help design relaxation exercises and mindfulness practices tailored to the individual, with guidance and feedback that support relaxation and stress reduction.

Ethical Considerations and the Future of AI in Mental Health

Bias and Fairness in AI-Powered Mental Health Tools

The development and deployment of AI tools in mental health must prioritize fairness and mitigate bias. AI models are trained on data, and if that data reflects existing societal biases, the AI system may perpetuate and even amplify them. For example, suppose a training dataset consists mostly of depression diagnoses from one demographic group. The model may then misread symptoms in people from other groups, resulting in missed or incorrect diagnoses and inequitable access to care. Careful selection and curation of training data, along with ongoing evaluation and auditing of AI algorithms, are crucial to ensure that these tools work equitably and ethically across diverse populations.
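To make "auditing" more concrete, one common check compares how often a model catches true cases in each demographic group. The sketch below is a minimal illustration, not a production auditing method. It assumes a labelled evaluation set of hypothetical `(group, actual, predicted)` records. It computes recall (the share of true cases the model flags) per group, plus the largest gap between groups. The function names and data are illustrative only.

```python
from collections import defaultdict


def recall_by_group(records):
    """Return, per group, the share of true cases the model flagged.

    Each record is a hypothetical (group, actual, predicted) tuple, where
    actual and predicted are booleans (True = condition present / flagged).
    Groups with no true cases are left out, since recall is undefined there.
    """
    flagged = defaultdict(int)    # true cases the model caught, per group
    positives = defaultdict(int)  # all true cases, per group
    for group, actual, predicted in records:
        if actual:
            positives[group] += 1
            if predicted:
                flagged[group] += 1
    return {g: flagged[g] / positives[g] for g in positives}


def recall_gap(records):
    """Largest difference in recall between any two groups."""
    rates = recall_by_group(records)
    return max(rates.values()) - min(rates.values())


# Hypothetical audit data: (group, has_condition, model_flagged)
audit = [
    ("group_a", True, True), ("group_a", True, True),
    ("group_a", True, True), ("group_a", True, False),
    ("group_b", True, True), ("group_b", True, False),
    ("group_b", True, False), ("group_b", True, False),
    ("group_b", False, False),
]

print(recall_by_group(audit))  # {'group_a': 0.75, 'group_b': 0.25}
print(recall_gap(audit))       # 0.5
```

A large gap, like the one in this toy data, would suggest the model misses cases in one group far more often than in another. A fuller audit would also compare false-positive rates and calibration. It would also need enough examples per group for the comparison to be meaningful.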

Transparency and explainability in AI decision-making are equally essential. Understanding *how* an AI tool arrives at a particular diagnosis or recommendation builds trust and makes human oversight possible. Without this transparency, it becomes difficult to identify and correct biases or errors, which can jeopardize the quality and reliability of care. Including diverse voices and perspectives throughout the development and implementation of AI-driven tools further helps promote fairness and prevent bias.

Data Privacy and Security in AI Mental Health Applications

Patient data is inherently sensitive, and the use of AI in mental health necessitates stringent data privacy and security protocols. Protecting the confidentiality and anonymity of patient information is paramount, and robust encryption and secure storage methods must be employed to prevent unauthorized access and breaches. Data anonymization techniques, along with adherence to relevant privacy regulations like HIPAA (in the US) and GDPR (in Europe), are crucial to safeguard patient information and maintain public trust.
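As one illustration of de-identification, the sketch below drops direct identifiers from a record and replaces the patient ID with a keyed hash (HMAC-SHA256, from Python's standard library). Strictly speaking, this is pseudonymization rather than full anonymization. Whoever holds the key can re-link records, GDPR still treats pseudonymized data as personal data, and remaining fields such as age or postcode may still allow re-identification. The field names, key handling, and the `pseudonymize` and `strip_identifiers` helpers are hypothetical.

```python
import hashlib
import hmac
import secrets

# Hypothetical key for illustration; a real system would load it from a
# key-management service and store it separately from the data.
PSEUDONYM_KEY = secrets.token_bytes(32)


def pseudonymize(patient_id: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace a direct identifier with a stable keyed token (HMAC-SHA256)."""
    return hmac.new(key, patient_id.encode("utf-8"), hashlib.sha256).hexdigest()


def strip_identifiers(record: dict,
                      direct_identifiers=("name", "email", "phone")) -> dict:
    """Drop direct identifiers and swap the patient ID for a pseudonym."""
    cleaned = {k: v for k, v in record.items() if k not in direct_identifiers}
    cleaned["patient_id"] = pseudonymize(record["patient_id"])
    return cleaned


# Hypothetical record
record = {
    "patient_id": "P-1042",
    "name": "Jane Doe",
    "email": "jane@example.com",
    "mood_score": 14,
}
safe = strip_identifiers(record)
print(sorted(safe))  # ['mood_score', 'patient_id']
```

A keyed hash is used instead of a plain hash because patient IDs often follow predictable formats. An attacker could reverse an unkeyed hash by simply hashing every possible ID.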

Clear, concise policies on data usage, access, and sharing should also be established and communicated transparently to patients. Patient data should be used only for the purpose for which it was collected. Patients should have the right to access, correct, or delete their data. AI applications should follow privacy-by-design principles, building data protection into the system from the outset rather than adding it as an afterthought. This creates a more ethical and trustworthy environment for the use of AI in mental health care.

The Role of Human Oversight and Collaboration

AI tools should be viewed as augmenting, rather than replacing, human clinicians. Human oversight and collaboration are essential to ensure that AI-driven mental health tools are used responsibly and effectively. Clinicians need training and support to effectively interpret and utilize the information provided by these tools, and to maintain the critical judgment and emotional intelligence necessary for providing holistic care.

AI can assist with tasks like screening, symptom monitoring, and personalized recommendations, but the final diagnosis and treatment decisions must remain in the hands of qualified mental health professionals. The integration of AI tools should empower clinicians, not diminish their role. This collaborative approach emphasizes the crucial human element of empathy and understanding, which remains vital in mental healthcare.

Accessibility and Affordability for Diverse Populations

Ensuring that AI-powered mental health tools are accessible and affordable for diverse populations is critical. Digital divides and socioeconomic disparities can create barriers to accessing these technologies. The development of affordable, accessible, and culturally sensitive AI solutions is essential to ensure equitable access to care. This requires considering factors such as language barriers, technological literacy, and geographic limitations.

Potential Impact on Mental Health Professionals and the Future of Therapy

The integration of AI in mental health may shift the roles and responsibilities of mental health professionals. AI could automate certain tasks, freeing clinicians to focus on more complex aspects of care. Possible job displacement and changes in the scope of practice, however, require careful consideration. Continued research and discussion about the future of the mental health professions in the age of AI are vital.

The future of mental healthcare likely involves a hybrid model, where AI tools augment human expertise. This collaborative approach could lead to more accessible, efficient, and personalized care for individuals across various demographics and socioeconomic backgrounds. Ethical considerations and careful implementation strategies are crucial to ensure that this integration benefits individuals and society as a whole.
