AI Powered Emotional Intelligence: Developing Empathy and Connection

Decoding Emotional Signals in Machines: A Deep Dive

Understanding how machines interpret and respond to emotional signals is a rapidly evolving field. This area of research delves into the complex interplay between artificial intelligence, human psychology, and the ever-increasing sophistication of technological interfaces. The ability to accurately decipher emotional cues could revolutionize everything from customer service to healthcare.

Current methods often rely on analyzing facial expressions, vocal intonations, and physiological data to gauge emotional states. However, the accuracy and reliability of these methods are still under scrutiny and require further development.

The Importance of Context

Context plays a crucial role in interpreting emotional signals. A single facial expression, for instance, can convey very different emotions depending on the surrounding circumstances. Machine learning algorithms therefore need to be trained on diverse datasets that capture those circumstances if they are to interpret context-dependent emotional cues accurately.

Considering the cultural and individual variations in emotional expression is essential for building robust systems. For example, a smile may signify happiness in one culture but politeness in another.

Machine Learning Models for Emotion Recognition

Various machine learning models are used to analyze human emotional signals. These models are typically trained on large datasets of labeled examples, such as images or audio clips annotated with an emotional state. Training allows the algorithms to identify patterns and correlations between the input data and the corresponding emotional states.
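To make the idea of learning from labeled examples concrete, here is a minimal sketch of a nearest-centroid classifier. It is only an illustration, not a method described in this article: the two features (`mouth_curvature`, `brow_raise`), their 0 to 1 scale, and the tiny dataset are all hypothetical, and real systems use far richer inputs and models.

```python
from collections import defaultdict
from math import dist


def train_centroids(samples):
    """Average the feature vectors of each label into one centroid per label."""
    sums = {}
    counts = defaultdict(int)
    for features, label in samples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def predict(centroids, features):
    """Return the label whose centroid is closest (Euclidean distance) to the input."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Hypothetical labeled data: (mouth_curvature, brow_raise) -> emotion label.
training_data = [
    ((0.9, 0.2), "happy"),
    ((0.8, 0.3), "happy"),
    ((0.1, 0.1), "sad"),
    ((0.2, 0.2), "sad"),
    ((0.5, 0.9), "surprised"),
    ((0.4, 0.8), "surprised"),
]

centroids = train_centroids(training_data)
prediction = predict(centroids, (0.85, 0.25))
print(prediction)
```

The "pattern" the model learns here is just the average position of each label in feature space. The same train-then-predict structure applies to the larger models discussed in this section.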

Deep learning architectures, particularly convolutional neural networks, have shown promising results in recognizing facial expressions and vocal tones. However, further research is necessary to expand their capacity to other modalities and contexts.

Challenges and Limitations in Current Approaches

Despite progress, several challenges remain in accurately decoding human emotional signals. One significant hurdle is the inherent complexity of human emotions, which can be multifaceted and difficult to quantify. Furthermore, the lack of standardized datasets and benchmarks for evaluating emotion recognition models hinders the development of reliable methods.

Another challenge is the potential for bias in machine learning algorithms, which can lead to inaccurate or unfair interpretations of emotional signals.

Ethical Considerations

As emotional recognition technology progresses, ethical considerations become increasingly important. The potential misuse of this technology, particularly in surveillance or discriminatory applications, needs careful consideration.

Transparent and accountable systems are crucial to ensure responsible development and deployment of emotion recognition technologies. This includes clear guidelines on data privacy and user rights.

Applications in Various Fields

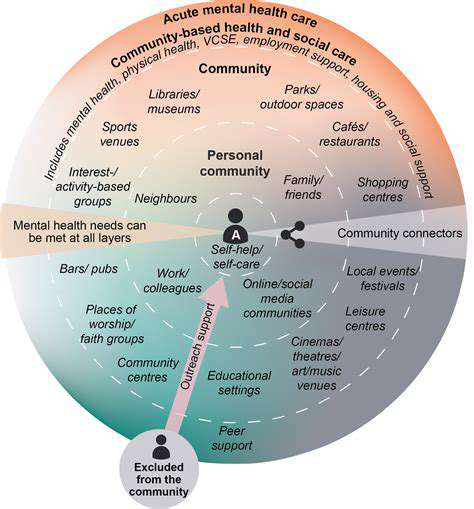

The potential applications of emotion recognition technology span numerous fields. In customer service, it could lead to more personalized and empathetic interactions. In healthcare, it could support clinicians in recognizing and treating mental health conditions. Emotion recognition could also change human-computer interaction by making interfaces more intuitive and responsive.

Future Directions and Research

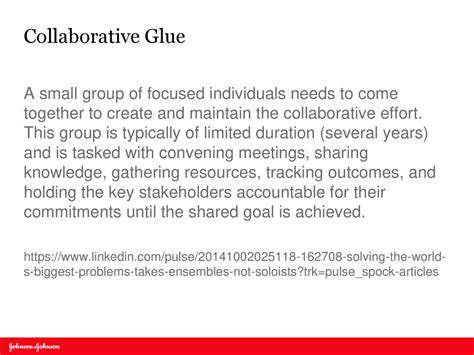

Future research should focus on developing more robust and reliable methods for machine emotion recognition. This includes exploring new data sources, improving algorithm performance, and addressing ethical concerns. The ultimate goal is to develop systems that can not only accurately decode emotional signals but also understand their nuances and context.

Further research should also investigate the potential for using emotion recognition to improve user experience in diverse fields like education, entertainment, and even robotics.

Ethical Considerations and the Future of Human-AI Interaction

Transparency and Explainability

A crucial ethical consideration in the burgeoning field of human-AI interaction is transparency. Users need to understand how AI systems arrive at their decisions, especially when those decisions impact their lives. Lack of transparency can lead to a loss of trust and a feeling of powerlessness. This is particularly critical in sensitive applications like healthcare, finance, and criminal justice, where the consequences of AI-driven errors can be significant. Furthermore, explainable AI (XAI) techniques are essential to build trust and accountability in the system. Clear communication of the reasoning behind AI's actions is paramount for responsible AI development.

The ability to explain AI's decision-making processes goes beyond simply providing output; it involves making the underlying logic accessible and understandable. This requires careful design and the development of methods that can articulate the steps taken by the AI in a way that is meaningful to human users. In the future, this will be a key factor in ensuring that AI systems are used ethically and responsibly.
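One simple way to picture "making the underlying logic accessible" is to break a score into per-feature contributions. The sketch below assumes a plain linear scoring model, which is a deliberately simple stand-in and not a technique this article prescribes. The feature names, weights, and applicant values are hypothetical.

```python
def explain_linear_score(weights, features, bias=0.0):
    """Return the total score and each feature's contribution, largest effect first."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


# Hypothetical model weights and one hypothetical applicant.
weights = {"income": 0.5, "debt_ratio": -2.0, "years_employed": 0.25}
applicant = {"income": 4.0, "debt_ratio": 1.5, "years_employed": 2.0}

score, ranked = explain_linear_score(weights, applicant)
print(f"score = {score}")
for name, contribution in ranked:
    print(f"{name}: {contribution:+.2f}")
```

Listing contributions in order of magnitude tells a user which inputs pushed the decision and in which direction. More complex models need dedicated explanation methods, but the goal of the output is the same.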

Bias and Fairness in AI Systems

AI systems are trained on data, and if that data reflects existing societal biases, the AI system will likely perpetuate and even amplify those biases. For example, if an AI system used to assess loan applications is trained on data that disproportionately shows people of a certain race or gender being denied loans, it will likely make similar discriminatory decisions in the future. This is a critical ethical concern, impacting access to opportunities and potentially exacerbating existing inequalities.

Addressing algorithmic bias requires careful data curation, diverse datasets, and ongoing monitoring. Developers must actively seek out and mitigate biases in their training data, and continuously assess the AI systems for fairness and equity. This is a complex challenge, but one that is essential for ensuring responsible and equitable AI deployment.
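As a rough illustration of what ongoing monitoring can look like, the sketch below compares approval rates across groups and reports the largest gap. This is often called a demographic parity check, and it is only one of several fairness measures. The group labels and decisions are made-up data, and any threshold for an acceptable gap would be a policy choice rather than something this code can decide.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Compute the share of approved decisions for each group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        if was_approved:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def parity_gap(rates):
    """Difference between the highest and lowest group approval rate."""
    return max(rates.values()) - min(rates.values())


# Hypothetical loan decisions: (group, approved?)
decisions = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

rates = approval_rates(decisions)
gap = parity_gap(rates)
print(rates, gap)
```

A large gap does not prove discrimination on its own, but it flags where the training data and the model's decisions deserve a closer look.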

Data Privacy and Security

AI systems often rely on vast amounts of personal data to function effectively, so ensuring the privacy and security of this data is paramount. Users must have control over their data and be aware of how AI systems use it. Strong data protection regulations and robust security measures are essential to prevent misuse and unauthorized access, because privacy violations can cause significant harm to individuals and to society.

Future development of AI systems must prioritize user data security and privacy. This includes creating clear and understandable data usage policies, implementing strong encryption protocols, and establishing mechanisms for user data control. Furthermore, fostering transparency and accountability in data handling practices will be vital to building trust.

Accountability and Responsibility

Determining who is responsible when an AI system makes an error or causes harm is a complex ethical question. Is it the developer, the user, or the AI system itself? Establishing clear lines of accountability is essential for mitigating potential harm and ensuring responsible AI development. As AI systems become more autonomous and sophisticated, the question of responsibility will only become more complex.

Future research and policy development should focus on defining clear roles and responsibilities in the AI development and deployment cycle. This includes establishing guidelines for liability in case of harm, creating mechanisms for redress, and developing training programs for AI developers on ethical considerations.

Impact on Human Employment and Society

The integration of AI into various sectors is predicted to automate many tasks currently performed by humans. This raises concerns about the potential impact on employment and the social fabric of society. Addressing these concerns requires careful planning and proactive measures to prepare for the potential shifts in the job market, including retraining programs, social safety nets, and policies that encourage the development of new, AI-augmented jobs.

Furthermore, the future of human-AI interaction must consider the potential impact on human well-being, societal structures, and the overall quality of life. The impact of AI on the economy, the environment, and the way humans interact with each other needs careful study and consideration to ensure a just and equitable future.
